We are quite busy with GPU support these days, but we wanted to give you a quick update on how things are going. Let's start with the UI.

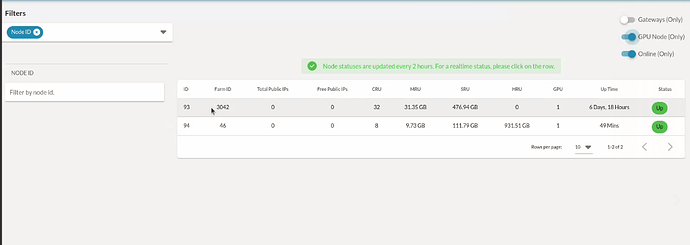

Explorer

- A new filter for GPU-supported nodes will be added, with:
  - GPU count
  - filtering based on the GPU model / device

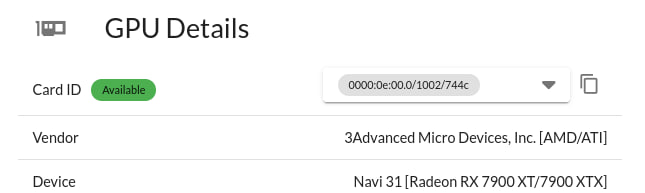

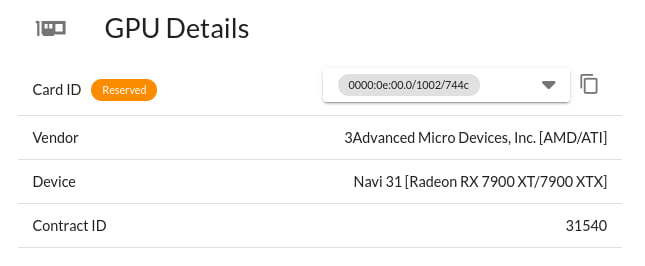

On the details pages, we will show the card information and its status (reserved or available). The ID needed during deployments is also easily accessible and has a copy-to-clipboard button.

Here's an example of how it looks when the card is reserved:

The PR includes videos: https://github.com/threefoldtech/tfgrid-sdk-ts/pull/706

Dashboard

The dashboard is where nodes get reserved. The farmer should be able to set the extra fee in the form, and the user should be able to reserve the node and see its details (cost including the extra fee, GPU information). [In progress]

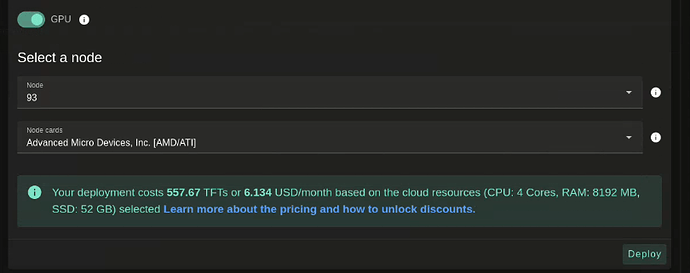

Playground

Currently, the playground is the easiest way to deploy a VM, and a new GPU option has been added to its filters.

This limits the search to nodes you have rented that have a GPU. Once it finds such nodes, it also shows a list of the GPUs available to use in the VM.
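As a rough illustration of that filter's logic, here is a minimal Go sketch, assuming each node exposes the `rentedByTwinId` and `num_gpu` values that Grid Proxy returns (see the node details response below). The `node` type, the `rentedGPUNodes` helper and nodes 94/95 are hypothetical; this is not the playground's actual code.

```go
package main

import "fmt"

// node is a hypothetical subset of the Grid Proxy node fields used by the filter.
type node struct {
	NodeID         uint32
	RentedByTwinID uint64
	NumGPU         int
}

// rentedGPUNodes keeps only nodes rented by twinID that report at least one GPU.
func rentedGPUNodes(nodes []node, twinID uint64) []node {
	var out []node
	for _, n := range nodes {
		if n.RentedByTwinID == twinID && n.NumGPU > 0 {
			out = append(out, n)
		}
	}
	return out
}

func main() {
	// Node 93 mirrors the sample node below; nodes 94 and 95 are made up.
	nodes := []node{
		{NodeID: 93, RentedByTwinID: 26, NumGPU: 1},
		{NodeID: 94, RentedByTwinID: 26, NumGPU: 0}, // rented, but no GPU
		{NodeID: 95, RentedByTwinID: 7, NumGPU: 2},  // has GPUs, rented by someone else
	}
	for _, n := range rentedGPUNodes(nodes, 26) {
		fmt.Println("candidate node:", n.NodeID)
	}
}
```

With twin 26, only node 93 passes both conditions.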

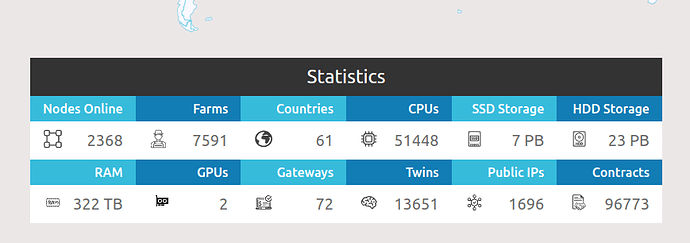

Statistics View

A simple card showing the GPU count has been added.

Testing

There's a test suite in progress, and any help expanding and reviewing our testing plan is definitely appreciated.

The next part is more technical and covers GPU support as a feature across components such as the chain, ZOS, and the underlying libraries.

TFChain

- On TFChain, a small migration will store whether the node has a GPU (has_gpu) or the number of GPU devices on the node, along with the extra fee the farmer wants to receive. ADR Link

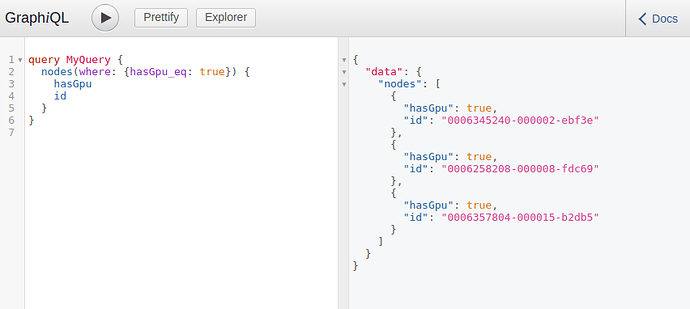

GraphQL

You can query the nodes on devnet with a query such as:

```graphql
query MyQuery {
  nodes(where: {hasGpu_eq: true}) {
    hasGpu
    id
  }
}
```

ZOS and 3Nodes

- ZOS support for detecting and enabling the GPU for a specific ZMachine (VM) is now merged https://github.com/threefoldtech/zos/pull/1973

- ZOS now has a listGPUs RMB call that allows querying the node's GPUs, which are returned in the form:

```
{
  id: '0000:0e:00.0/1002/744c',
  vendor: 'Advanced Micro Devices, Inc. [AMD/ATI]',
  device: 'Navi 31 [Radeon RX 7900 XT/7900 XTX]',
  contract: 31540
}
```

The vendor and device fields are self-explanatory. contract is the contract that has reserved the GPU, and id identifies the exact slot along with the vendor and the device, separated by slashes: <SLOT>/<VENDOR>/<DEVICE>.
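Since deployments take this id verbatim, a client may want to validate it before sending it. Here is a minimal Go sketch that splits the id on `/` into its three parts. In the example, the slot looks like a PCI address and `1002`/`744c` look like PCI vendor/device IDs (0x1002 is AMD's PCI vendor ID), but that reading is our interpretation. The `gpuID` type and `parseGPUID` function are hypothetical, not part of any client.

```go
package main

import (
	"fmt"
	"strings"
)

// gpuID is the decoded form of a GPU id: <SLOT>/<VENDOR>/<DEVICE>.
type gpuID struct {
	Slot   string // e.g. "0000:0e:00.0"
	Vendor string // e.g. "1002"
	Device string // e.g. "744c"
}

// parseGPUID splits id on "/" and requires exactly three non-empty parts.
func parseGPUID(id string) (gpuID, error) {
	parts := strings.Split(id, "/")
	if len(parts) != 3 {
		return gpuID{}, fmt.Errorf("invalid GPU id %q: want <SLOT>/<VENDOR>/<DEVICE>", id)
	}
	for _, p := range parts {
		if p == "" {
			return gpuID{}, fmt.Errorf("invalid GPU id %q: empty component", id)
		}
	}
	return gpuID{Slot: parts[0], Vendor: parts[1], Device: parts[2]}, nil
}

func main() {
	g, err := parseGPUID("0000:0e:00.0/1002/744c")
	if err != nil {
		panic(err)
	}
	fmt.Printf("slot=%s vendor=%s device=%s\n", g.Slot, g.Vendor, g.Device)
}
```

Running it prints `slot=0000:0e:00.0 vendor=1002 device=744c`.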

Grid Proxy

Grid Proxy provides a REST interface on top of GraphQL, plus some extra logic on top of the indexer database.

- The node details endpoint now returns the number of GPUs (num_gpu), e.g. https://gridproxy.dev.grid.tf/nodes/93

```json
{
  "id": "0006258208-000008-fdc69",
  "nodeId": 93,
  "farmId": 3042,
  "twinId": 3181,
  "country": "Belgium",
  "gridVersion": 5,
  "city": "Berchem",
  "uptime": 595827,
  "created": 1686828732,
  "farmingPolicyId": 1,
  "updatedAt": 1687430796,
  "capacity": {
    "total_resources": {"cru": 32, "sru": 512110190592, "hru": 0, "mru": 33665941504},
    "used_resources": {"cru": 0, "sru": 107374182400, "hru": 0, "mru": 3366594150}
  },
  "location": {"country": "Belgium", "city": "Berchem", "longitude": 4.4294, "latitude": 51.1904},
  "publicConfig": {"domain": "", "gw4": "", "gw6": "", "ipv4": "", "ipv6": ""},
  "status": "up",
  "certificationType": "Diy",
  "dedicated": false,
  "rentContractId": 31805,
  "rentedByTwinId": 26,
  "serialNumber": "M80-CA013501994",
  "power": {"state": "", "target": ""},
  "num_gpu": 1,
  "extraFee": 0
}
```
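A client only needs a few of these fields to decide whether a node is a GPU candidate. The Go sketch below decodes them from the response, using JSON tags that match the keys in the sample above. Unknown keys are ignored by `encoding/json`, so the struct can stay small. The `nodeDetails` type and `parseNodeDetails` function are hypothetical, and the numeric types chosen are our assumption.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// nodeDetails is a hypothetical subset of the /nodes/<id> response.
type nodeDetails struct {
	NodeID         uint32 `json:"nodeId"`
	Status         string `json:"status"`
	RentedByTwinID uint64 `json:"rentedByTwinId"`
	NumGPU         int    `json:"num_gpu"`
	ExtraFee       uint64 `json:"extraFee"`
}

// parseNodeDetails decodes the fields above and ignores every other key.
func parseNodeDetails(raw []byte) (nodeDetails, error) {
	var n nodeDetails
	err := json.Unmarshal(raw, &n)
	return n, err
}

func main() {
	// Excerpt of the sample response for node 93.
	raw := []byte(`{"nodeId":93,"status":"up","rentContractId":31805,"rentedByTwinId":26,"num_gpu":1,"extraFee":0}`)
	n, err := parseNodeDetails(raw)
	if err != nil {
		panic(err)
	}
	fmt.Printf("node %d: status=%s gpus=%d rentedBy=%d extraFee=%d\n",
		n.NodeID, n.Status, n.NumGPU, n.RentedByTwinID, n.ExtraFee)
}
```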

- The stats endpoint https://gridproxy.dev.grid.tf/stats now also returns the total number of GPUs on the grid (gpus):

```json
{
  "nodes": 82,
  "farms": 4257,
  "countries": 11,
  "totalCru": 1010001108,
  "totalSru": 100088947416594522,
  "totalMru": 100012174259244860,
  "totalHru": 321326156620739,
  "publicIps": 417,
  "accessNodes": 8,
  "gateways": 6,
  "twins": 3013,
  "contracts": 31826,
  "nodesDistribution": {"": 1, "42656c6769756d": 1, "Belgium": 40, "EG-1656487239": 1, "Egy": 1, "Egypt": 23, "Germany": 1, "SomeCountry": 1, "United States": 11, "egypt": 1, "someCountry": 1},
  "gpus": 3
}
```

Grid Proxy will also provide search by GPU vendor or device. [Still in progress]
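Until the server-side search is available, a client can approximate it over a node's listGPUs result. This Go sketch does case-insensitive substring matching on vendor and device, where an empty term matches everything. It is only an assumption about how such a search could behave, not the planned Grid Proxy implementation. The `gpu` type and `matchGPUs` function are hypothetical, and the sample entry is the one from the listGPUs example above.

```go
package main

import (
	"fmt"
	"strings"
)

// gpu mirrors the fields returned by the listGPUs call.
type gpu struct {
	ID       string
	Vendor   string
	Device   string
	Contract uint64
}

// matchGPUs returns GPUs whose vendor and device contain the given terms,
// ignoring case; strings.Contains with an empty term always matches.
func matchGPUs(gpus []gpu, vendor, device string) []gpu {
	vendor, device = strings.ToLower(vendor), strings.ToLower(device)
	var out []gpu
	for _, g := range gpus {
		if strings.Contains(strings.ToLower(g.Vendor), vendor) &&
			strings.Contains(strings.ToLower(g.Device), device) {
			out = append(out, g)
		}
	}
	return out
}

func main() {
	gpus := []gpu{{
		ID:       "0000:0e:00.0/1002/744c",
		Vendor:   "Advanced Micro Devices, Inc. [AMD/ATI]",
		Device:   "Navi 31 [Radeon RX 7900 XT/7900 XTX]",
		Contract: 31540,
	}}
	for _, g := range matchGPUs(gpus, "amd", "7900") {
		fmt.Println("match:", g.ID)
	}
}
```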

Farmerbot

An upcoming update will let the Farmerbot find nodes with GPUs. For more details, please check the issue.

Clients

Golang

Client PR https://github.com/threefoldtech/tfgrid-sdk-go/pull/207/ adds support for the new listGPUs call and for deploying a machine with GPUs.

Here's an example of how to deploy using the Go client (part of our integration tests):

```go
// setup, GenerateSSHKeyPair, convertGBToBytes, ConvertGPUsToStr, RemoteRun, statusUp
// and trueVal are helpers from the same integration test package (not shown here).
func TestVMWithGPUDeployment(t *testing.T) {
	tfPluginClient, err := setup()
	assert.NoError(t, err)

	ctx, cancel := context.WithTimeout(context.Background(), 5*time.Minute)
	defer cancel()

	publicKey, privateKey, err := GenerateSSHKeyPair()
	assert.NoError(t, err)

	// Only consider nodes that are up, rented by this twin and have a GPU.
	twinID := uint64(tfPluginClient.TwinID)
	nodeFilter := types.NodeFilter{
		Status:   &statusUp,
		FreeSRU:  convertGBToBytes(20),
		FreeMRU:  convertGBToBytes(8),
		RentedBy: &twinID,
		HasGPU:   &trueVal,
	}

	nodes, err := deployer.FilterNodes(ctx, tfPluginClient, nodeFilter)
	if err != nil || len(nodes) == 0 {
		t.Skip("no available nodes found")
	}

	nodeID := uint32(nodes[0].NodeID)
	nodeClient, err := tfPluginClient.NcPool.GetNodeClient(tfPluginClient.SubstrateConn, nodeID)
	assert.NoError(t, err)

	// Ask the node for its GPUs (the listGPUs RMB call).
	gpus, err := nodeClient.GPUs(ctx)
	assert.NoError(t, err)

	network := workloads.ZNet{
		Name:        "gpuNetwork",
		Description: "network for testing gpu",
		Nodes:       []uint32{nodeID},
		IPRange: gridtypes.NewIPNet(net.IPNet{
			IP:   net.IPv4(10, 20, 0, 0),
			Mask: net.CIDRMask(16, 32),
		}),
		AddWGAccess: false,
	}

	disk := workloads.Disk{
		Name:   "gpuDisk",
		SizeGB: 20,
	}

	vm := workloads.VM{
		Name:        "gpu",
		Flist:       "https://hub.grid.tf/tf-official-vms/ubuntu-22.04.flist",
		CPU:         4,
		Planetary:   true,
		Memory:      1024 * 8,
		GPUs:        ConvertGPUsToStr(gpus), // IDs of the GPUs reported by the node
		Entrypoint:  "/init.sh",
		EnvVars:     map[string]string{"SSH_KEY": publicKey},
		Mounts:      []workloads.Mount{{DiskName: disk.Name, MountPoint: "/data"}},
		NetworkName: network.Name,
	}

	err = tfPluginClient.NetworkDeployer.Deploy(ctx, &network)
	assert.NoError(t, err)
	defer func() {
		err = tfPluginClient.NetworkDeployer.Cancel(ctx, &network)
		assert.NoError(t, err)
	}()

	dl := workloads.NewDeployment("gpu", nodeID, "", nil, network.Name, []workloads.Disk{disk}, nil, []workloads.VM{vm}, nil)
	err = tfPluginClient.DeploymentDeployer.Deploy(ctx, &dl)
	assert.NoError(t, err)
	defer func() {
		err = tfPluginClient.DeploymentDeployer.Cancel(ctx, &dl)
		assert.NoError(t, err)
	}()

	// The deployed VM should carry the same GPU list.
	vm, err = tfPluginClient.State.LoadVMFromGrid(nodeID, vm.Name, dl.Name)
	assert.NoError(t, err)
	assert.Equal(t, ConvertGPUsToStr(gpus), vm.GPUs)

	// Give the VM time to boot, then check that the GPU shows up on its PCI bus.
	time.Sleep(30 * time.Second)
	output, err := RemoteRun("root", vm.YggIP, "lspci -v", privateKey)
	assert.NoError(t, err)
	assert.Contains(t, string(output), gpus[0].Vendor)
}
```
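The test above relies on a ConvertGPUsToStr helper to turn the listGPUs result into the IDs placed in the VM's GPUs field. The following is a minimal, hypothetical stand-in written against a local `gpu` type. It adds an optional `onlyFree` switch that skips GPUs with a non-zero contract, which assumes that a zero contract means the GPU is not used by any contract. The real helper and the client's GPU type may differ.

```go
package main

import "fmt"

// gpu mirrors the listGPUs fields (local type for this sketch).
type gpu struct {
	ID       string
	Vendor   string
	Device   string
	Contract uint64
}

// gpuIDs returns the GPU IDs to attach to a VM. With onlyFree set, it skips
// GPUs whose Contract is non-zero (assumed to mean "already reserved").
func gpuIDs(gpus []gpu, onlyFree bool) []string {
	ids := make([]string, 0, len(gpus))
	for _, g := range gpus {
		if onlyFree && g.Contract != 0 {
			continue
		}
		ids = append(ids, g.ID)
	}
	return ids
}

func main() {
	gpus := []gpu{{
		ID:       "0000:0e:00.0/1002/744c",
		Vendor:   "Advanced Micro Devices, Inc. [AMD/ATI]",
		Device:   "Navi 31 [Radeon RX 7900 XT/7900 XTX]",
		Contract: 31540,
	}}
	fmt.Println(gpuIDs(gpus, false)) // [0000:0e:00.0/1002/744c]
	fmt.Println(gpuIDs(gpus, true))  // []
}
```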

Terraform

GPU support is almost there. Here's an example:

```hcl
terraform {
  required_providers {
    grid = {
      source = "threefoldtechdev.com/providers/grid"
    }
  }
}

provider "grid" {
}

locals {
  name = "testvm"
}

resource "grid_network" "net1" {
  name        = local.name
  nodes       = [93]
  ip_range    = "10.1.0.0/16"
  description = "newer network"
}

resource "grid_deployment" "d1" {
  name         = local.name
  node         = 93
  network_name = grid_network.net1.name

  vms {
    name       = "vm1"
    flist      = "https://hub.grid.tf/tf-official-apps/base:latest.flist"
    cpu        = 2
    memory     = 1024
    entrypoint = "/sbin/zinit init"
    env_vars = {
      SSH_KEY = file("~/.ssh/id_rsa.pub")
    }
    planetary = true
    # GPU id as returned by listGPUs: <SLOT>/<VENDOR>/<DEVICE>
    gpus = [
      "0000:0e:00.0/1002/744c"
    ]
  }
}
```

grid-cli

grid-cli will include a --gpu flag to deploy on a specific node you have rented.

TypeScript

A lot depends on the updates here, e.g. all of our frontend components.

Client PR https://github.com/threefoldtech/tfgrid-sdk-ts/pull/666

It includes a couple of updates: finding nodes with GPUs, querying a node for GPU information, and deploying with GPU support.

An example script to deploy with GPU support:

```typescript
import { DiskModel, FilterOptions, MachineModel, MachinesModel, NetworkModel } from "../src";
import { config, getClient } from "./client_loader";
import { log } from "./utils";

async function main() {
  const grid3 = await getClient();

  // create network Object
  const n = new NetworkModel();
  n.name = "vmgpuNetwork";
  n.ip_range = "10.249.0.0/16";

  // create disk Object
  const disk = new DiskModel();
  disk.name = "vmgpuDisk";
  disk.size = 100;
  disk.mountpoint = "/testdisk";

  const vmQueryOptions: FilterOptions = {
    cru: 8,
    mru: 16, // GB
    sru: 100,
    availableFor: grid3.twinId,
    hasGPU: true,
    rentedBy: grid3.twinId,
  };

  // create vm node Object
  const vm = new MachineModel();
  vm.name = "vmgpu";
  vm.node_id = +(await grid3.capacity.filterNodes(vmQueryOptions))[0].nodeId; // TODO: allow random choice
  vm.disks = [disk];
  vm.public_ip = false;
  vm.planetary = true;
  vm.cpu = 8;
  vm.memory = 1024 * 16;
  vm.rootfs_size = 0;
  vm.flist = "https://hub.grid.tf/tf-official-vms/ubuntu-22.04.flist";
  vm.entrypoint = "/";
  vm.env = {
    SSH_KEY: config.ssh_key,
  };
  vm.gpu = ["0000:0e:00.0/1002/744c"]; // gpu card's id, you can check the available gpu from the dashboard

  // create VMs Object
  const vms = new MachinesModel();
  vms.name = "vmgpu";
  vms.network = n;
  vms.machines = [vm];
  vms.metadata = "";
  vms.description = "test deploying VM with GPU via ts grid3 client";

  // deploy vms
  const res = await grid3.machines.deploy(vms);
  log(res);

  // get the deployment
  const l = await grid3.machines.getObj(vms.name);
  log(l);

  // delete
  const d = await grid3.machines.delete({ name: vms.name });
  log(d);

  await grid3.disconnect();
}

main();
```

Manuals

The manuals are an in-progress effort. We are still busy getting to code complete, but they will be available as soon as possible.

Questions

Right now, it seems the image sizes are going to be quite large. If you can provide more insights or help improve the situation, that would be amazing. For now, our solution is to provision a full virtual machine in which users manually install the drivers and frameworks they need. Is that a reasonable approach?

Thank you