Thanks for the report! This seems like an excellent route for those who want to ensure all data is removed in a more efficient manner than dd.

How to clear disks for DIY 3Nodes?

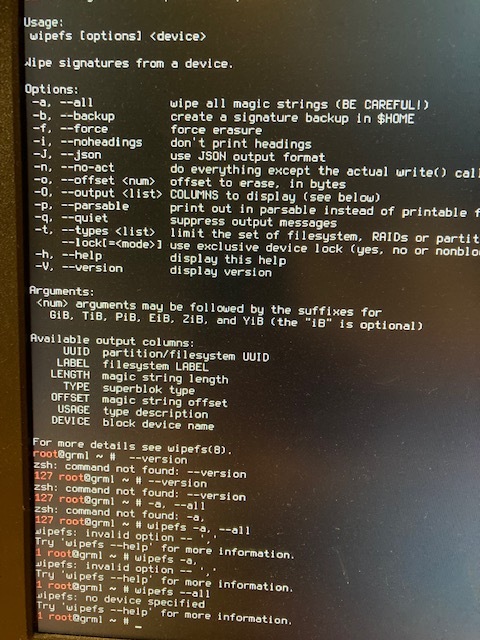

I am having a hard time erasing the hard drives using the grml distro.

fdisk does not work

df does not work

and wipefs -a and other variations do not work

Can someone provide a basic command prompt?

This is just an example:

wipefs -a /dev/sda

Be careful with that command!
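Since wipefs -a is destructive, one cautious approach is to preview what it would do first. The sketch below is only an illustration: is_whole_disk is a hypothetical helper with a deliberately simple name pattern, and the device is whatever you pass as the first argument. It relies on wipefs listing signatures when run without options and on its -n (--no-act) flag, which skips the actual write.

```shell
#!/bin/sh
# Hypothetical helper: accept only simple whole-disk names such as
# /dev/sda or /dev/nvme0n1 (partitions like /dev/sda1 are rejected).
is_whole_disk() {
    case "$1" in
        /dev/sd[a-z]|/dev/nvme[0-9]n[0-9]) return 0 ;;
        *) return 1 ;;
    esac
}

DISK="${1:-}"   # target device, passed as the first argument

if is_whole_disk "$DISK"; then
    lsblk "$DISK"          # show size, partitions and mount points of the target
    wipefs "$DISK"         # list detected signatures; nothing is erased
    wipefs -a -n "$DISK"   # --no-act: report what -a would erase, without writing
else
    echo "usage: pass a whole-disk device such as /dev/sda"
fi
```

Once the preview shows the disk you expect, the real wipe is the same wipefs -a command without -n.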

Example commands are in the original post above.

Great post.

I’ve heard from some farmers that it won’t work properly if you do not write sudo in front of the command.

If this is so, could we add this (sudo) to the original post, so people include it when they read the instructions?

Thanks!

^This might have been what I was missing.

I gave up though. Since the 1.5TB or so HDDs the 720 came with don’t add much to the node compared to the 4TB SSDs I added, I left the HDDs as is.

Oh that’s sad. But at least now you have one more clue for troubleshooting this kind of problem. So perhaps later on you will try once more, in one of your maintenance windows. I try to spread out my time on the 3nodes, otherwise it takes too much time in one session (for upgrades, etc.).

Also, if the HDDs are not even connected to the Grid, you can simply remove them and their cables; the server would draw less power. So more rewards, in a way!

Yes please. In order to make changes to a disk’s partition table (layout) you need to be an administrator in the OS you are using. If you are not logged in as root (or something similar in Windows / macOS) then the sudo command allows you to execute one single command as root / administrator. So indeed please add it to the original post.

The original post was written referencing GRML specifically, where you land on a root terminal by default. On the flip side, attempting to use sudo in this case might produce an error.

I’ll edit the post to mention that root is needed.

Thanks for the additional information. It’s great.

If you see a $ sign rather than a # on the terminal, you’re not root.
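Besides looking at the prompt, you can ask the system directly: id -u prints the numeric user ID, and root is always 0. A minimal sketch (is_root_uid is just a hypothetical helper name):

```shell
#!/bin/sh
# Return success (0) if the given numeric user ID is root's, i.e. 0.
is_root_uid() {
    [ "$1" -eq 0 ]
}

if is_root_uid "$(id -u)"; then
    echo "Running as root: disk commands can be run as-is."
else
    echo "Not root: prefix disk commands with sudo, or switch to a root shell."
fi
```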

I’ve learned something today! Nice to know.

So with wipefs -a /dev/sd* I was getting the message: probing initialization failed. Device or resource busy.

Command wipefs -af /dev/sd* fixed it.

Thanks for the heads up. Very nice to know it worked out.

I will add this for sure on the FAQ.

For the record, NORMALLY when you get that error, you are trying to wipe your boot USB by accident.

But why would -af work and not -a?

The -f flag stands for force. With wipefs, it forces the erasure even if the filesystem on the device is mounted.
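Before reaching for -f, it may be worth checking whether the "busy" device is actually in use, for example because it is the boot USB. The sketch below lists mount entries that belong to a given disk. It assumes a Linux system with /proc/mounts; mounts_on_disk is a hypothetical helper, and it uses a simple prefix match, so /dev/sda would also match a device named /dev/sdaa.

```shell
#!/bin/sh
# Read /proc/mounts-style lines on stdin and print "source mountpoint"
# for every entry whose source starts with the given disk name.
mounts_on_disk() {
    awk -v d="$1" 'index($1, d) == 1 { print $1, $2 }'
}

DISK="${1:-/dev/sda}"   # disk to inspect; /dev/sda is only a default for the example

if [ -r /proc/mounts ]; then
    mounts_on_disk "$DISK" < /proc/mounts
fi
```

If this prints anything, the disk is mounted somewhere, and forcing a wipe on it is probably not what you want.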

Hi there! I came here to share my experience.

Yesterday I got my first 3node running.

I followed the documentation and reached the point where I needed to clear the disks.

I tried the grml option described above without success; it didn’t boot at all after several attempts.

Then I booted a light version of Ubuntu (Lubuntu) from a USB stick without any problem and was able to run the wipefs commands (sometimes I needed to force with -f) on all the /dev/sd* and /dev/nvme* partitions.

Well, I assume it went fine, because I didn’t know what to expect in terms of results.

Hence my question:

How can we guarantee that the wipe process was successful? Is there a way to check that?

Thx

So you are not booting at all? That sounds more like a boot media issue as opposed to an SSD clearing issue. You can assume an SSD issue if you get an SSD not found error.

I hear this from time to time. Not sure why some systems won’t boot grml, but I still like it as a first choice due to the small download size (400 MB for the small version versus 1.4 GB even for Ubuntu server edition).

This is a great question. After running some experiments here, I think the best way to check is running fdisk -l again. Specifically, you’re looking for the Disklabel type field. A totally clear disk won’t display any label type. If you see one, the process wasn’t successful.
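To make that check less error-prone across several disks, the fdisk -l output can be filtered for the Disklabel type line. This is only a sketch: disklabel_type is a hypothetical helper, and it assumes the field is printed as "Disklabel type: ..." as described above.

```shell
#!/bin/sh
# Read `fdisk -l <disk>` output on stdin and print the label type,
# or "none" when no "Disklabel type:" line is present.
disklabel_type() {
    awk -F': ' '/^Disklabel type:/ { print $2; found = 1 }
                END { if (!found) print "none" }'
}

# Only touch a real device when one is passed as the first argument (needs root).
if [ -n "${1:-}" ]; then
    fdisk -l "$1" | disklabel_type
fi
```

A result of none for every disk you wiped is what you are hoping to see.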

Congrats! Thanks for sharing your experience. I’ll update this post with some clarifications based on what you wrote.

Yes, good point. The exception would be any distro that auto mounts other drives. I would suspect this to be the case for most any full desktop distro, whereas it is not the case for grml.

Hey @renauter

I too wanted to check if wiping my disks was done properly.

Here’s a post I wrote with some command lines to make sure it’s OK. Basically there are many ways to find out:

For example:

Then to double check if the SSD was indeed only composed of zeroes, I used:

cmp /dev/sda /dev/zero

This line should give

cmp: EOF on /dev/sda

if there are only zeroes on the disk.
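The same cmp check can be wrapped so that it gives a plain yes/no answer. A minimal sketch, assuming a cmp that reports "EOF on ..." when the first file ends before any difference is found (is_all_zero is a hypothetical helper name; on a large disk this reads the whole device and can take a long time):

```shell
#!/bin/sh
# Return success if the given file or device contains only zero bytes.
# cmp stops at the first differing byte; if it instead reaches the end
# of the first file, it reports "EOF on <file>".
is_all_zero() {
    out=$(LC_ALL=C cmp "$1" /dev/zero 2>&1 || true)
    case "$out" in
        *"EOF on"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Only read a real device when one is passed as the first argument (needs root).
if [ -n "${1:-}" ]; then
    if is_all_zero "$1"; then
        echo "$1: all zeroes"
    else
        echo "$1: non-zero data found"
    fi
fi
```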

Basically a clear wiped disk will have:

wiped disk = no label, no partition, no filesystem, all zeroes

As Nelson said, I think you did wipe your disks properly though.

Do you still have trouble booting your 3node? If your 3node booted fine it means the wiping of the disks worked. Double-check on the explorer that the 3node’s resources match (correct quantity of RAM, SSD, etc.).

Happy Farming!