I wanted to add some documentation on the theory and goal behind my post above, so that anyone with thoughts about forks or a better way to accomplish the same thing knows what I'm aiming for. My functional goal is this:

Goal: Establish the ability for a home user to host multiple publicly accessible 3Nodes within the confines of a single IP address.

Relation to mission: A truly decentralized internet requires overcoming the current real estate shortage in routable address space on the internet.

Secondary goals:

- Minimal resource usage
- Secure packet transmission
- Predictable, but actively configurable, routes
- 100% open source

Significant challenges:

- Integration into existing technology
- Need for maintained zero configuration
- Rapid adoptability

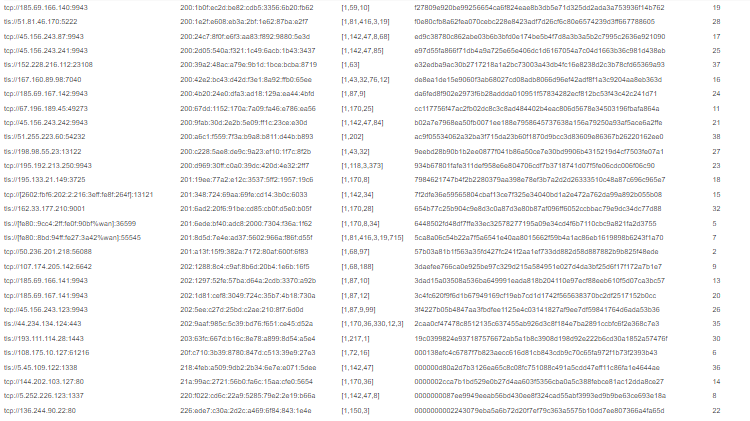

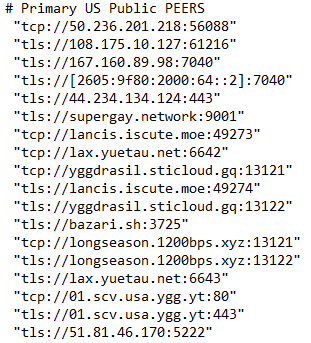

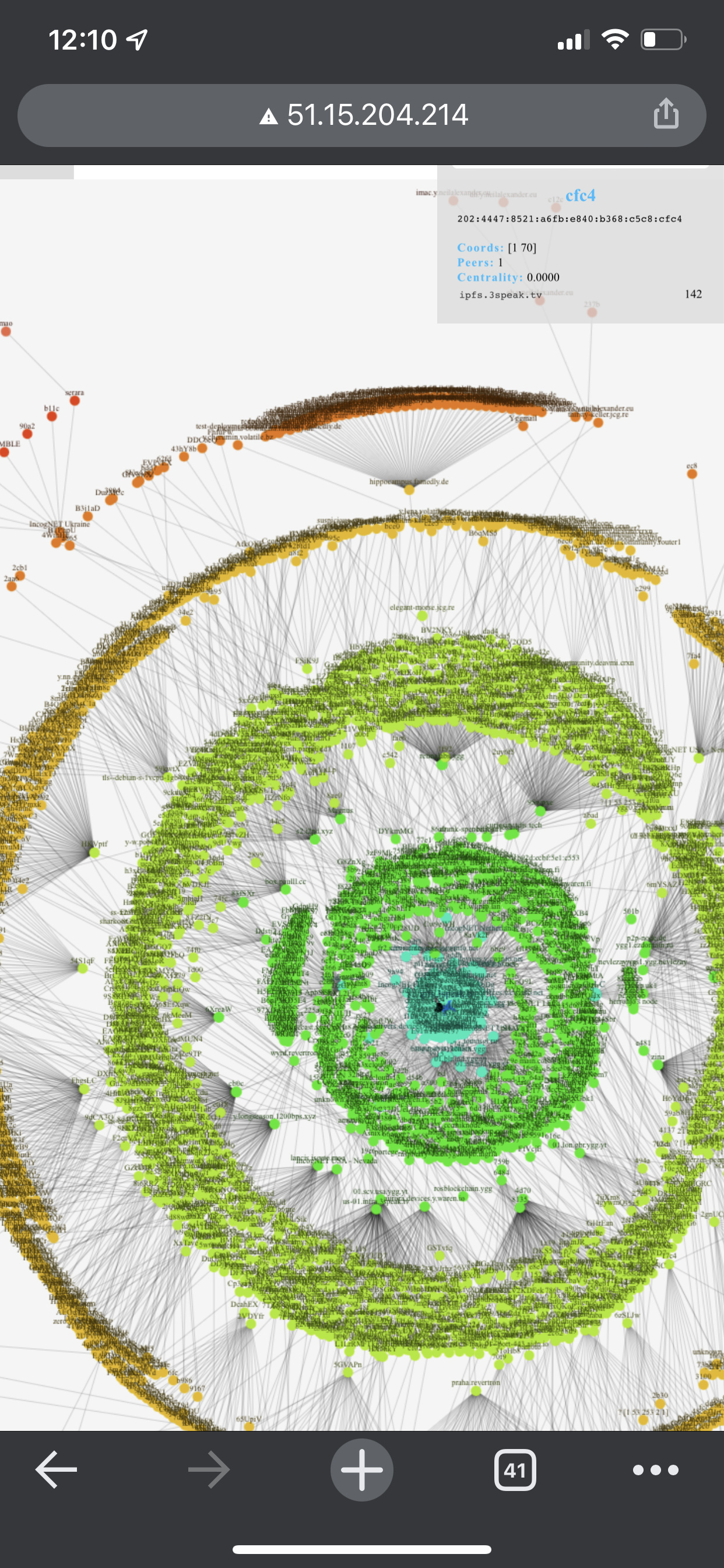

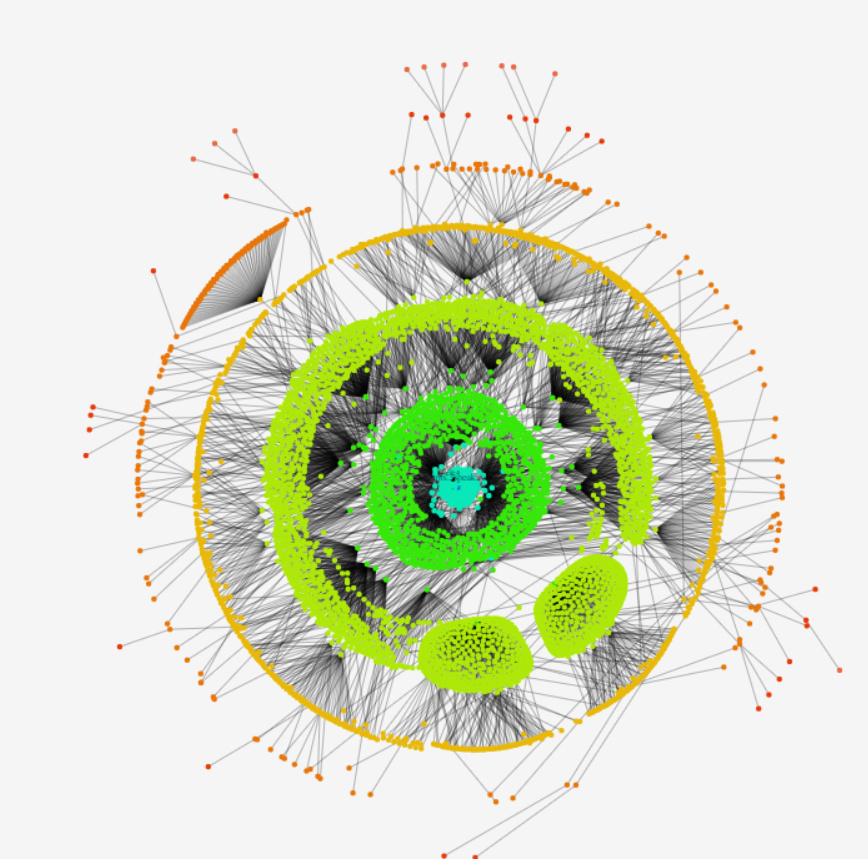

With a clear path set, and having spent a few hours on familiarization, I think the answer to this problem lies within Zero-OS and tools we are all familiar with from traditional brick-and-mortar web hosting. As it stands today, zos can already receive external commands without a public address through its Yggdrasil interface. The roadblock is that members of the public would have to set up and configure that interface on their own devices. That prompts the need for a configuration in which a node that does have access to a public IP can support its neighbors and provide routing for them. This would effectively create a communication layer unique to ThreeFold which, because we control both ends of the connection, can be much more effective than traditional routing.

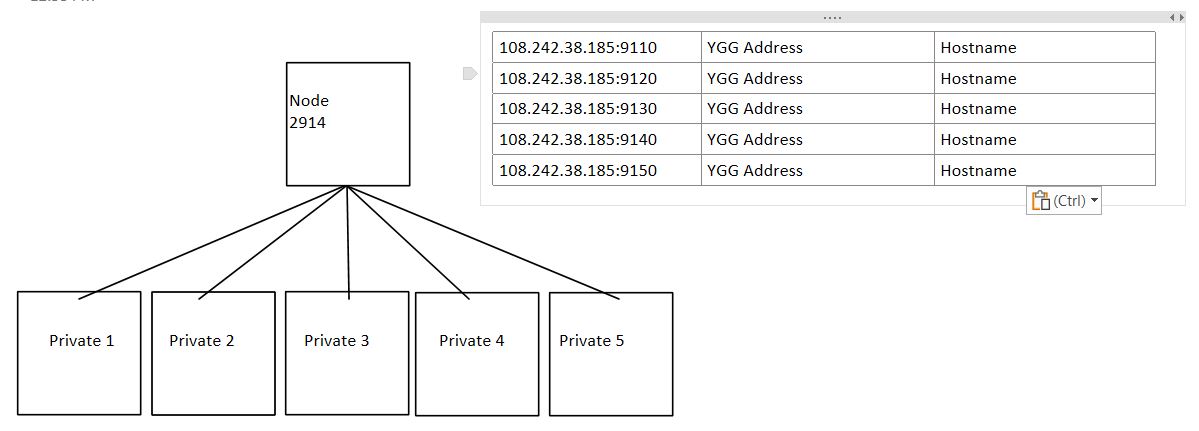

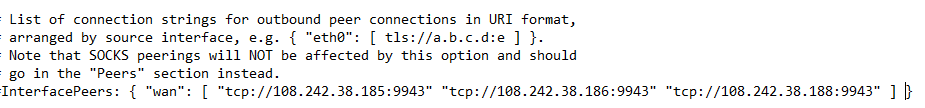

I think the answer to this lies within Yggdrasil. A subsystem able to deploy configurations to Yggdrasil within zos on a private-IP node would allow that node to initiate a peer-to-peer tunnel to a public-IP node, and so serve the private-IP server's resources through that node's public IP.

The problem is that this would still require someone to go buy a massive number of IP addresses. So how do we fix that? I think there are two options here: channeling a single IP out by port within the ThreeFold network, or using SNI (Server Name Indication). Switching to SNI outside of the ThreeFold network wouldn't be realistic, because not every device supports it and it could get pretty wonky with conflicts. We don't have that problem here, though, because again we control both endpoints of the communication in this scenario. Something as simple as naming each interface sequentially, when the blockchain does the naming, could prevent those conflicts.
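To make the two options concrete, here is a minimal sketch of a lookup table that shares one public IP across several private nodes, by SNI name or by port. Everything in it is an assumption for illustration: the `SharedIpRouter` class, the `node<N>.<domain>` naming scheme, the port range, and the example addresses are all hypothetical, not anything zos or the chain does today.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class SharedIpRouter:
    """Hypothetical table mapping one public IP onto many private nodes."""
    public_ip: str
    domain: str               # hypothetical zone the chain would name hosts under
    first_port: int = 20000   # hypothetical start of the per-node port range
    by_name: Dict[str, str] = field(default_factory=dict)
    by_port: Dict[int, str] = field(default_factory=dict)

    def register(self, ygg_address: str) -> Tuple[str, int]:
        """Give a private node the next sequential name and port."""
        index = len(self.by_name) + 1
        name = f"node{index}.{self.domain}"
        port = self.first_port + index - 1
        self.by_name[name] = ygg_address
        self.by_port[port] = ygg_address
        return name, port

    def resolve(self, sni_name: Optional[str] = None,
                port: Optional[int] = None) -> Optional[str]:
        """Find the private node's Yggdrasil address, preferring SNI."""
        if sni_name is not None and sni_name in self.by_name:
            return self.by_name[sni_name]
        if port is not None:
            return self.by_port.get(port)
        return None


router = SharedIpRouter(public_ip="203.0.113.10", domain="example.test")
first = router.register("200:aaaa::1")    # made-up Yggdrasil addresses
second = router.register("200:bbbb::1")
```

Because names and ports are handed out in sequence from a single place, two nodes behind the same IP can never be given the same name or port, which is the conflict the sequential naming is meant to avoid.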

So client 1 chooses a farm based on available hardware, but that farm doesn't have a public IP. A lower-resource farm has one available and has a great connection rating, so the client adds that public IP onto their order. This creates a task that updates the ygg config on both machines, adding the two nodes to each other's peers lists. A VM is deployed on the machine holding the public IP, and it directs traffic through ygg to the named host on the private IP.
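The peering task could look roughly like the sketch below. It assumes each node's Yggdrasil config has already been parsed into a dictionary with a `Peers` list of peer URIs (the key Yggdrasil uses for outbound peers); the `add_peer` and `link_nodes` helpers and the example URIs are hypothetical, and how zos would actually load, write, and reload the config is left out.

```python
from typing import Dict, List

Config = Dict[str, List[str]]


def add_peer(config: Config, peer_uri: str) -> bool:
    """Add peer_uri to the config's Peers list; return True if it changed."""
    peers = config.setdefault("Peers", [])
    if peer_uri in peers:
        return False          # already peered, nothing to do
    peers.append(peer_uri)
    return True


def link_nodes(public_cfg: Config, private_cfg: Config,
               public_uri: str, private_uri: str) -> bool:
    """Add each node to the other's Peers list, as the order task would.

    Since the private node is the side that can dial out, its entry for
    the public node is the one that would actually open the tunnel.
    """
    changed_private = add_peer(private_cfg, public_uri)
    changed_public = add_peer(public_cfg, private_uri)
    return changed_private or changed_public


# Placeholder URIs for illustration only.
public_cfg: Config = {"Peers": []}
private_cfg: Config = {}
changed = link_nodes(public_cfg, private_cfg,
                     "tls://203.0.113.10:9001", "tls://192.168.1.50:9001")
changed_again = link_nodes(public_cfg, private_cfg,
                           "tls://203.0.113.10:9001", "tls://192.168.1.50:9001")
```

Making the update idempotent means the task can be retried safely if it fails on one machine after succeeding on the other.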

Pull on your waders, we're about to get deep. Under the current configuration, this could also allow a machine to change its IP, or fail over an IP from one node to another in the same farm automatically, making a failure transparent outside of the ThreeFold network. Instead of configuring the public IPv4 address on an individual node, all nodes with access to the same public subnet could be configured so that when a client chooses that IP address, the farm queries all nodes for status and assigns that static address to the node with the least current load. If that node goes offline, the process repeats, allowing another node's NIC to grab that IP address and redeploy the forwarding automatically.
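The selection and failover logic described here can be sketched as follows. The `NodeStatus` record, the single `load` number, and the function names are assumptions for illustration; how the farm would actually query nodes and move the address between NICs is not covered.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class NodeStatus:
    """Hypothetical status report a farm might collect from each node."""
    node_id: str
    online: bool
    load: float   # assumed: lower means less busy


def pick_holder(nodes: Iterable[NodeStatus]) -> Optional[NodeStatus]:
    """Return the online node with the least load, or None if all are down."""
    candidates = [n for n in nodes if n.online]
    if not candidates:
        return None
    # Break ties on node_id so the choice is deterministic.
    return min(candidates, key=lambda n: (n.load, n.node_id))


def reassign(current_id: Optional[str],
             nodes: Iterable[NodeStatus]) -> Optional[str]:
    """Keep the current holder while it is online; otherwise fail over."""
    statuses = list(nodes)
    for node in statuses:
        if node.node_id == current_id and node.online:
            return current_id
    chosen = pick_holder(statuses)
    return chosen.node_id if chosen else None


farm = [
    NodeStatus("node-a", online=True, load=0.70),
    NodeStatus("node-b", online=True, load=0.20),
    NodeStatus("node-c", online=True, load=0.45),
]
holder = reassign(None, farm)                      # initial assignment
farm[1] = NodeStatus("node-b", online=False, load=0.0)
new_holder = reassign(holder, farm)                # node-b went offline
```

Keeping the address on the current holder while it stays online avoids moving the IP every time load shifts slightly; it only moves on an actual failure.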

If there is one thing no other network can currently offer, it is abundant, affordable public address space. By using Yggdrasil to create an IPv6-only "transport layer" between nodes, we can create a publicly accessible network that won't have to compete with other providers, because we will be able to offer something no one else can. With the technology available today, we can turn every IP address available on ThreeFold into 10 public addresses, reduce worldwide network overloads, and implement new technologies across a worldwide deployment on the fly.

Love it!


I’ll take some more time to digest what you’re suggesting about a potential Helium integration too.
