I’ll try again now… Give me 10 mins.

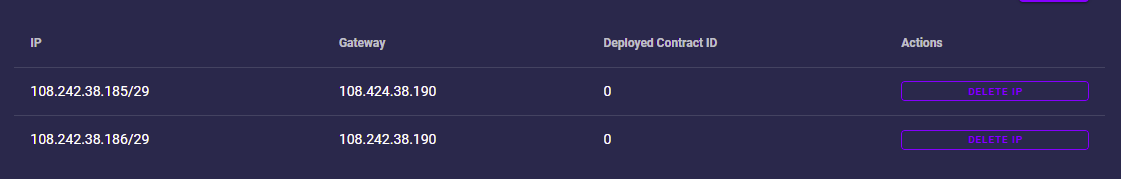

Verify configuration for public IPs

Of course! Thank you very much!

I still cannot reach the public IP… sorry.

Default Gateway:

➜ terraform-dany git:(main) ✗ ping 178.250.167.65

PING 178.250.167.65 (178.250.167.65) 56(84) bytes of data.

64 bytes from 178.250.167.65: icmp_seq=1 ttl=242 time=179 ms

64 bytes from 178.250.167.65: icmp_seq=2 ttl=242 time=173 ms

64 bytes from 178.250.167.65: icmp_seq=3 ttl=242 time=123 ms

Check - can get there.

(New) VM deployed and ping / tracepath:

1?: [LOCALHOST] pmtu 1500

1: _gateway (192.168.0.1) 31.778ms asymm 2

1: _gateway (192.168.0.1) 32.308ms asymm 2

2: 5.195.3.81 (5.195.3.81) 101.101ms

3: 94.56.186.5 (94.56.186.5) 41.899ms

4: 86.96.144.36 (86.96.144.36) 12.336ms

5: 86.96.144.18 (86.96.144.18) 1000.436ms asymm 6

6: 195.229.1.76 (195.229.1.76) 175.342ms

7: 195.229.3.175 (195.229.3.175) 306.793ms

8: AMDGW2.arcor-ip.net (80.249.208.123) 185.910ms

9: de-dus23f-rb01-be-1050.aorta.net (84.116.191.118) 239.203ms asymm 11

10: ip-005-147-251-214.um06.pools.vodafone-ip.de (5.147.251.214) 248.896ms asymm 11

11: 176-215.access.witcom.de (217.19.176.215) 253.562ms asymm 12

12: 176-219.access.witcom.de (217.19.176.219) 137.063ms asymm 13

13: no reply

14: no reply

15: no reply

16: no reply

17: no reply

And the same output for the VM’s IP:

➜ terraform-dany git:(main) ✗ tracepath -b 178.250.167.69

1?: [LOCALHOST] pmtu 1500

1: _gateway (192.168.0.1) 351.430ms asymm 2

1: _gateway (192.168.0.1) 40.293ms asymm 2

2: 5.195.3.81 (5.195.3.81) 4.763ms

3: 94.56.186.5 (94.56.186.5) 19.766ms

4: no reply

5: 86.96.144.18 (86.96.144.18) 829.805ms asymm 6

6: 195.229.1.76 (195.229.1.76) 359.504ms

7: 195.229.3.145 (195.229.3.145) 143.151ms

8: AMDGW2.arcor-ip.net (80.249.208.123) 211.805ms

9: de-dus23f-rb01-be-1050.aorta.net (84.116.191.118) 188.953ms asymm 11

10: ip-005-147-251-214.um06.pools.vodafone-ip.de (5.147.251.214) 400.730ms asymm 11

11: 176-215.access.witcom.de (217.19.176.215) 159.937ms asymm 12

12: 176-219.access.witcom.de (217.19.176.219) 217.842ms asymm 13

13: no reply

14: no reply

15: no reply

So traffic stops somewhere at the router…
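To compare traces like the two above without reading them line by line, here is a minimal Python sketch that finds the last hop that still answered. The function name `last_responding_hop` and the abridged `SAMPLE` text are illustrative assumptions, not part of any ThreeFold tooling; the parsing only relies on the `N: host (ip) time` layout visible in the tracepath output.

```python
import re

# Matches "12: host (1.2.3.4) 217.842ms ..." and "1?: [LOCALHOST] pmtu 1500".
HOP_RE = re.compile(r"^\s*(\d+)\??:\s+(.*)$")
IP_RE = re.compile(r"\((\d{1,3}(?:\.\d{1,3}){3})\)")


def last_responding_hop(tracepath_output):
    """Return (hop_number, ip) of the last hop that replied, or None."""
    last = None
    for line in tracepath_output.splitlines():
        match = HOP_RE.match(line)
        if not match:
            continue
        hop, rest = int(match.group(1)), match.group(2)
        ip = IP_RE.search(rest)
        if ip is None:
            # "no reply" and "[LOCALHOST]" lines carry no hop address.
            continue
        last = (hop, ip.group(1))
    return last


# Abridged copy of the trace to 178.250.167.69 shown above.
SAMPLE = """\
 1?: [LOCALHOST] pmtu 1500
 1: _gateway (192.168.0.1) 40.293ms asymm 2
 2: 5.195.3.81 (5.195.3.81) 4.763ms
 4: no reply
11: 176-215.access.witcom.de (217.19.176.215) 159.937ms asymm 12
12: 176-219.access.witcom.de (217.19.176.219) 217.842ms asymm 13
13: no reply
14: no reply
"""
```

Calling `last_responding_hop(SAMPLE)` returns hop 12 at 217.19.176.219, the last address that answered before the run of "no reply" lines. That is where to start looking, although a silent hop can also just mean the device drops the probes.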

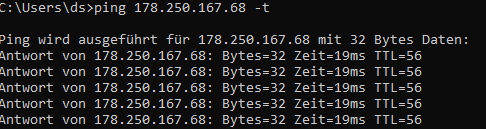

I don’t understand this. I checked the routing one more time and it looks good. I temporarily allowed the router’s WAN interface (IP = .68) to respond to pings. Routing works fine… see below.

So from the DC side, the routing from their gateway to our public IP net seems to be set correctly.

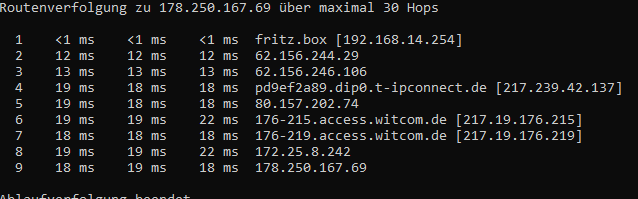

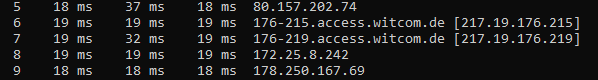

For troubleshooting I added a virtual IP (WAN IP alias) for the .69, and pings to this address also work fine. The traceroute looks like this:

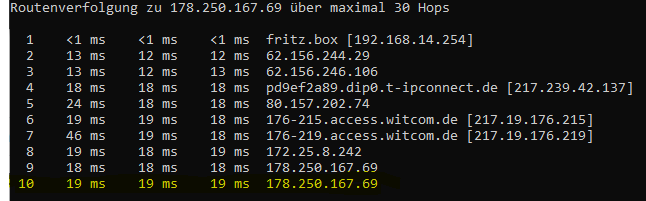

I can even redirect (port forwarding) the .69 to the node’s LAN interface. The traceroute then shows an additional hop.

As I said… there is no firewall or router between the DC gateway and the second NIC port of each node. The second NIC ports are connected directly to the switch that the DC uplink is connected to. The router’s WAN interface also goes there and is working perfectly fine. Technically, the WAN interface is on the same topology level as the second NIC ports. If the router’s WAN side is working, public IPs on the secondary NIC ports should be working too.

Just to be absolutely sure… can you please confirm the physical setup is right:

The first NIC port of each 3node is connected to a LAN where it gets an IP and gateway from a DHCP server.

The public IP address(es) will be assigned to a second physical NIC port on the node, correct?

Therefore this second NIC port has to be attached to a separate network that the public IP range is routed to, correct? So from my understanding, the node has its connection to the grid via the LAN and is then told by the grid: “Hey node… please assign yourself this public IP on your secondary NIC port”. Correct?

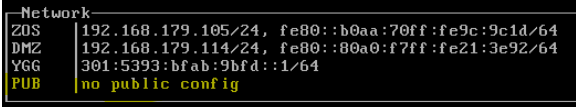

What confuses me is that ZOS still says no public config.

I really don’t understand this. Will have to further investigate.

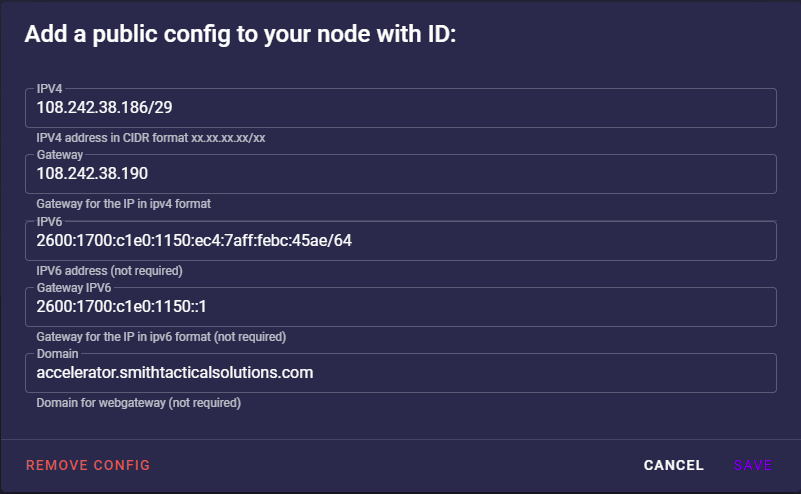

I used the TF Chain Portal to assign public IP address(es) to the farm.

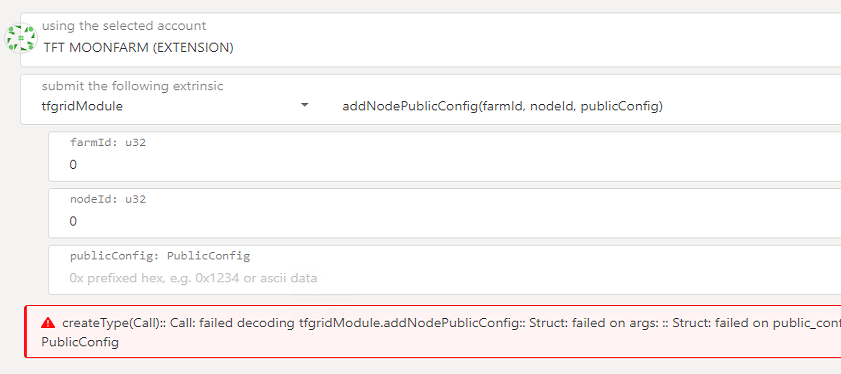

As described here (https://library.threefold.me/info/manual/#/manual__public_config) public IPs should be assigned in the polkadot UI. When I try to check this in polkadot UI I get the following errors:


Any ideas?

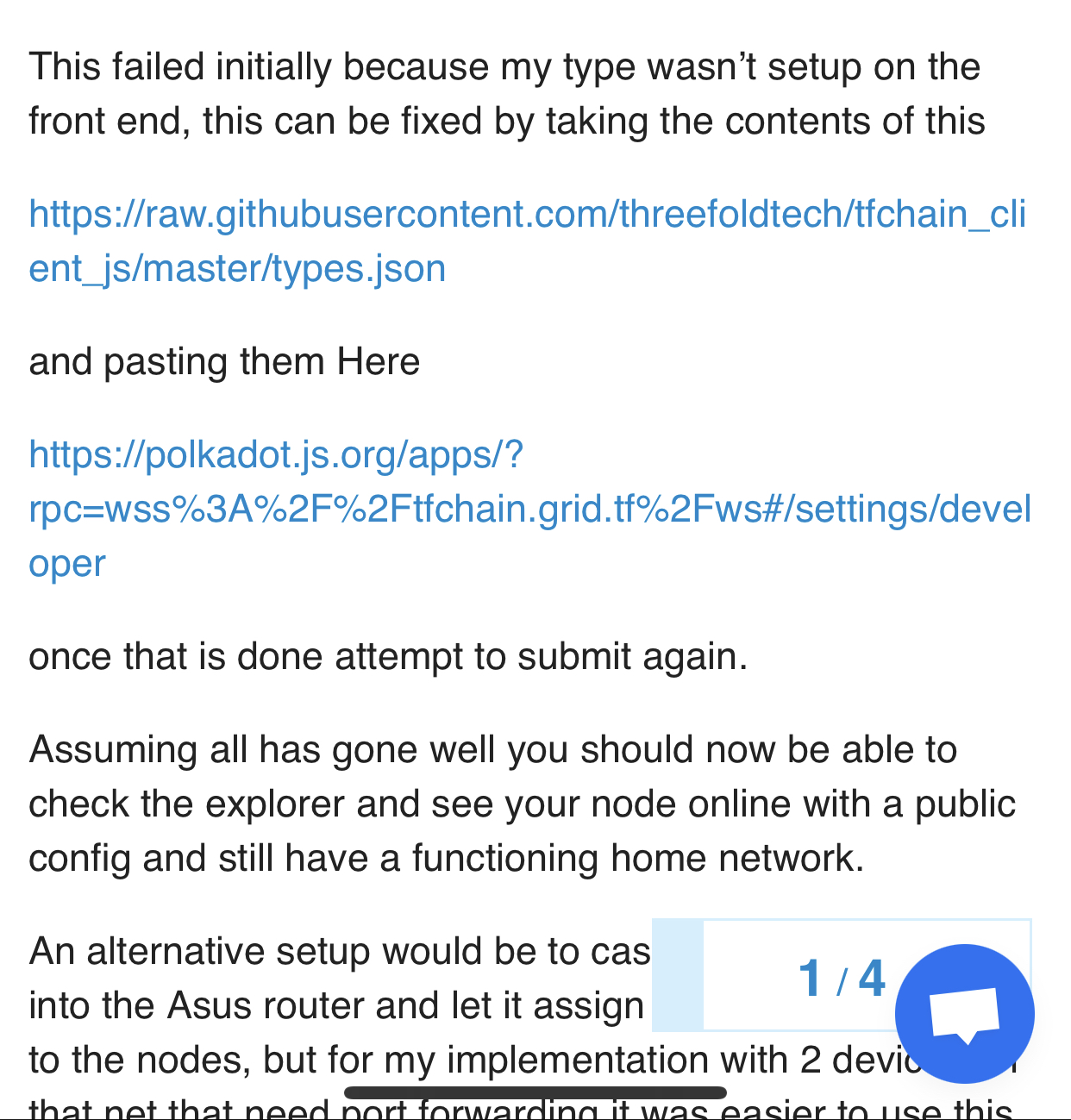

Dany, that’s how I configured 2914, 3049 and 3081. I can jump on and try to help? I put a walkthrough in my multi-gig thread as well.

You may need to add the front end manually in the portal for the config to apply successfully.

Public Node Setup for the home user.

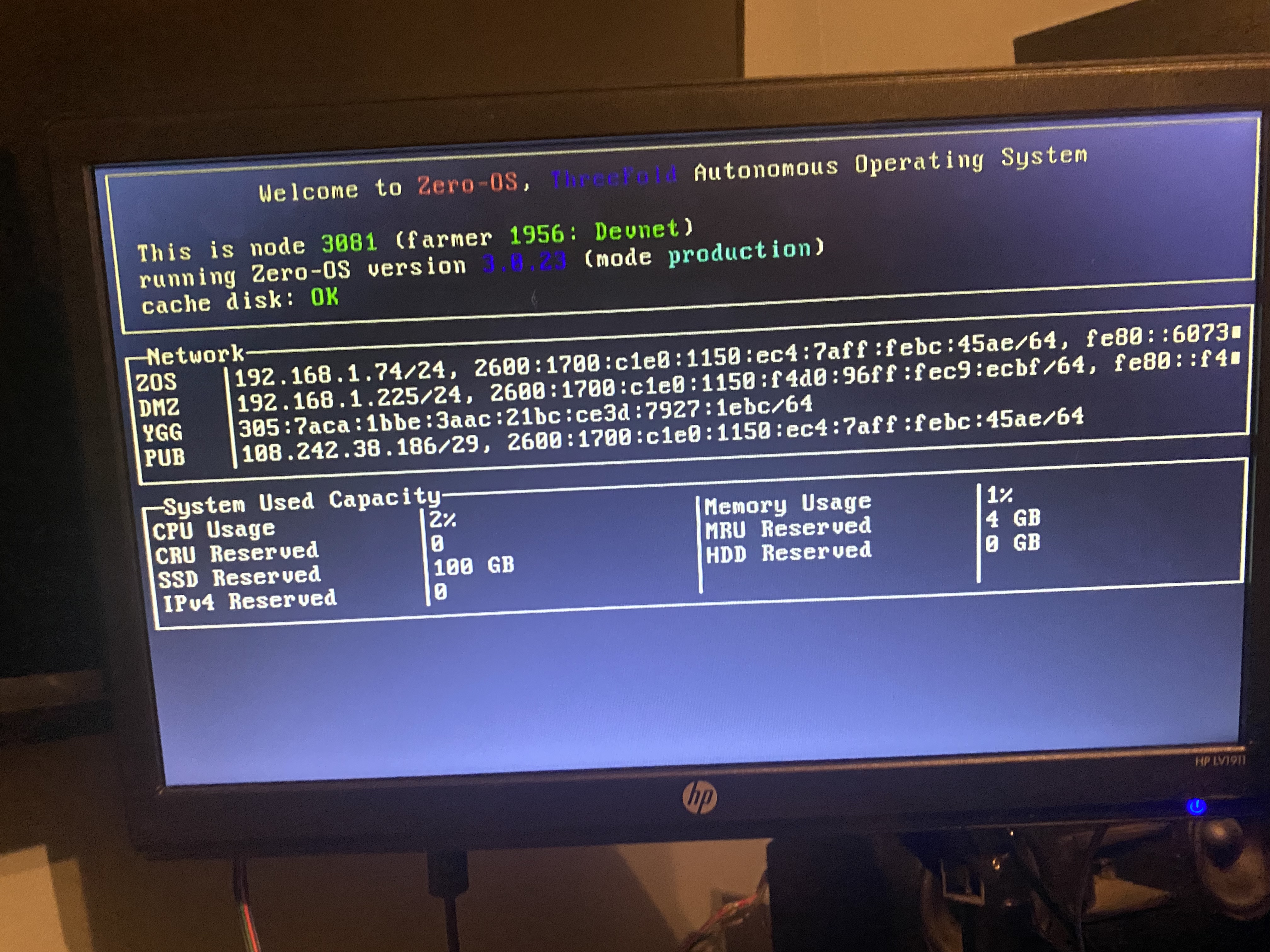

Once the config is applied properly, a public config will show on the main screen.

Hey parker

thanks for the advice. It’s up and running now. It seems like it’s not enough to just offer the public IP to the farm via TF Chain portal. After assigning the public IP to the particular node via polkadot UI it works.

Ah yes, I couldn’t do it in the portal either. Exciting to see more gateways popping up. I’m working on getting my little home DC setup to handle a 64-address block in the next couple of weeks.

@weynandkuijpers could you please check one more time? It should work now.

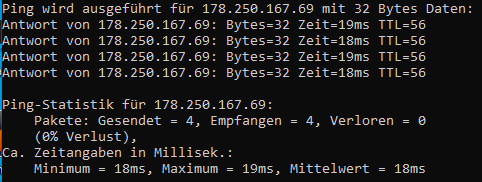

Server is pingable! See…

The traceroute looks good too.

Hey parkers,

Can you give me some details on your cabling? Do you have a second NIC port in use for the public IPs on the nodes? I did some more testing and now have different scenarios configured.

Have you ever tested routing to the public IPs assigned to your nodes? I mean not from inside your network, but from the web?

I’m only using a single NIC connection. The node seems to be creating virtual interfaces, because the nodes are using MAC addresses that don’t physically exist in the boxes.

I have a primary gateway with a run to each node that handles all the subnetting. It really was pretty minimal on the configuration side once I realized that the node creates another interface with a static address, using the information from the public config you put in on the Polkadot UI.

I’ve never had a test run, but I had the impression they were working properly. It would be news to me if they weren’t.

So I just have my gateway hand out private subnet addresses at boot. Then, when I added the public config, it created the static interface with an address inside the public subnet range.

As I plan to bring some nodes to a local datacenter, I’m following this thread. It seems we really could use a guide for dummies (me, that is) on how to set up your node in a datacenter correctly.

There’s definitely not a lot of info on the gateway side. I have a few threads going with bits and pieces here,

but I’m working on some bigger-picture, more general stuff now that will be more widely applicable as I figure it out myself. I’m active on Discord, Telegram and here, so let me know if I can help!

Could you make sure my public IP config is working correctly on 2914, 3049 or 3081? Dany pointed out I’ve never tested it, and I’ve been answering lots of questions assuming my setup was correct…

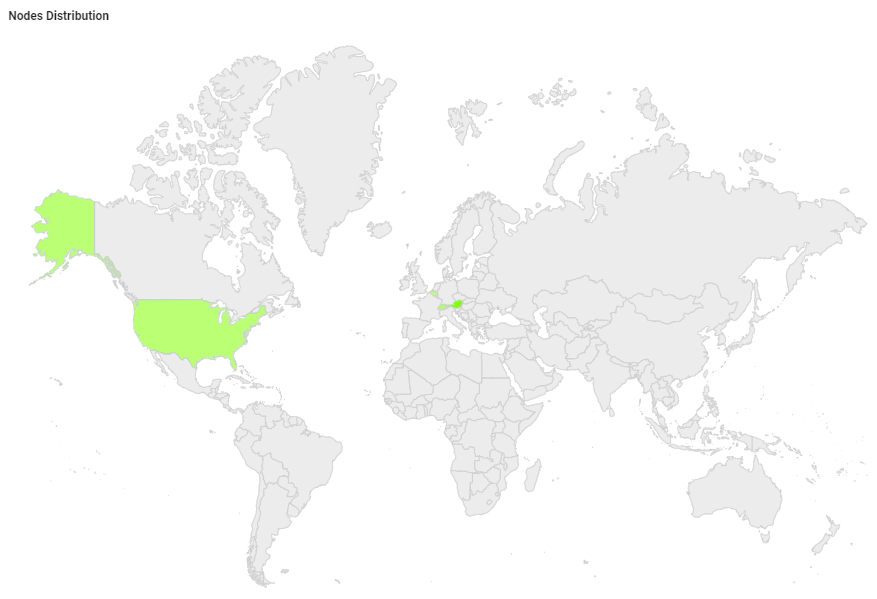

Dany, congrats on running the first German gateway nodes!

There are only gateways in four countries right now and, as far as I know, you and I are the only DIY gateways.

Hi @Dany,

All looks good!

➜ terraform-dany git:(main) ✗ ssh root@178.250.167.69

ssh: connect to host 178.250.167.69 port 22: Connection refused

➜ terraform-dany git:(main) ✗ ping 178.250.167.69

PING 178.250.167.69 (178.250.167.69) 56(84) bytes of data.

64 bytes from 178.250.167.69: icmp_seq=1 ttl=51 time=135 ms

64 bytes from 178.250.167.69: icmp_seq=2 ttl=51 time=126 ms

64 bytes from 178.250.167.69: icmp_seq=3 ttl=51 time=137 ms

64 bytes from 178.250.167.69: icmp_seq=4 ttl=51 time=120 ms

64 bytes from 178.250.167.69: icmp_seq=5 ttl=51 time=128 ms

^C

--- 178.250.167.69 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4002ms

rtt min/avg/max/mdev = 119.557/129.110/136.627/6.248 ms

➜ terraform-dany git:(main) ✗ ssh root@178.250.167.69

The authenticity of host '178.250.167.69 (178.250.167.69)' can't be established.

ED25519 key fingerprint is SHA256:GZduV+dbPnaRHqRwNzoO1JRVXS5eH+E7ZKeTcfzLrX8.

This host key is known by the following other names/addresses:

~/.ssh/known_hosts:33: 301:d12e:3351:4208:c32b:a725:4b6c:aa03

~/.ssh/known_hosts:35: 301:d12e:3351:4208:9a7c:cf44:d2d4:f788

~/.ssh/known_hosts:36: 301:d12e:3351:4208:6243:5c48:b899:8932

~/.ssh/known_hosts:37: 301:5393:bfab:9bfd:35f:140b:5736:7042

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '178.250.167.69' (ED25519) to the list of known hosts.

Welcome to Ubuntu 20.04.4 LTS (GNU/Linux 5.12.9 x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

This system has been minimized by removing packages and content that are

not required on a system that users do not log into.

To restore this content, you can run the 'unminimize' command.

The programs included with the Ubuntu system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by

applicable law.

root@proxy1:~#

Congratulations! The connection was refused at the beginning because this flist starts a few daemons, and this takes some time. The last step in the process is to start sshd. As you can see, it got there and works.
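Since sshd only comes up at the end of the boot sequence, a first "Connection refused" is expected and it is worth retrying for a while before assuming something is wrong. A minimal Python sketch of that retry loop follows; the helper name `wait_for_port` and the timeout values are assumptions for illustration only.

```python
import socket
import time


def wait_for_port(host, port, timeout=120.0, interval=2.0):
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass.

    Returns True as soon as the port accepts a connection, False otherwise.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError on "connection refused" or timeout.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # The daemon is probably not listening yet: wait and try again.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For the VM in this thread, the call would be something like `wait_for_port("178.250.167.69", 22)` before attempting the actual `ssh root@178.250.167.69`.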

Let’s cable the other nodes as well

I’ll test it now…

Hi @parker, something does not sit right with this setup:

Enter a value: yes

grid_network.proxy01: Creating...

grid_network.proxy01: Still creating... [10s elapsed]

grid_network.proxy01: Still creating... [20s elapsed]

grid_network.proxy01: Still creating... [30s elapsed]

grid_network.proxy01: Creation complete after 34s [id=637b3ef2-a634-49cd-ac6e-0cdb19f4e987]

grid_deployment.p1: Creating...

grid_deployment.p1: Still creating... [10s elapsed]

grid_deployment.p1: Still creating... [20s elapsed]

╷

│ Error: error waiting deployment: workload 0 failed within deployment 3608 with error could not get public ip config: public ip workload is not okay

│

│ with grid_deployment.p1,

│ on main.tf line 21, in resource "grid_deployment" "p1":

│ 21: resource "grid_deployment" "p1" {

│

I used the same Terraform deployment script as for @Dany’s test, just replacing his node 1983 with your 3081. Please have a look at the farm setup at https://portal.grid.tf in the farm / public IP section (you should have a /something defined there which is not a /32).
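As a quick sanity check for the point about the prefix, here is a small Python sketch using only the standard `ipaddress` module. The function name `check_public_ip_entry` is hypothetical, and the `/29` in the usage note is purely an assumed example, since the thread never states the real prefix length of the 178.250.167.x range.

```python
import ipaddress


def check_public_ip_entry(ip_cidr, gateway):
    """Return a list of problems with a farm public IP entry (empty if none)."""
    problems = []
    iface = ipaddress.ip_interface(ip_cidr)  # a bare address is treated as /32
    gw = ipaddress.ip_address(gateway)

    if iface.network.prefixlen == iface.network.max_prefixlen:
        problems.append("prefix is a single-host mask, e.g. /32")
    if gw not in iface.network:
        problems.append("gateway is outside the subnet")
    if gw == iface.ip:
        problems.append("gateway equals the public IP itself")
    return problems
```

With the assumed prefix, `check_public_ip_entry("178.250.167.69/29", "178.250.167.65")` returns an empty list, while the same address entered as `/32` is flagged both for the single-host mask and for the gateway falling outside the subnet.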

@Dany, any more suggestions to check?

Yeah…Great!! Thank you very much @weynandkuijpers.

We will connect the other nodes as soon as possible. I’ll let you know when it’s done!

Thanks again!

This is my config under the node specifically:

This is under the farm: