Server 1983

```
Terraform will perform the actions described above.
Only 'yes' will be accepted to approve.

Enter a value: yes

grid_network.proxy01: Creating...
grid_network.proxy01: Still creating... [10s elapsed]
grid_network.proxy01: Still creating... [20s elapsed]
grid_network.proxy01: Still creating... [30s elapsed]
grid_network.proxy01: Still creating... [40s elapsed]
grid_network.proxy01: Creation complete after 45s [id=6a509d6e-ce42-45b4-b5b8-560d115ef5f2]
grid_deployment.p1: Creating...
grid_deployment.p1: Still creating... [10s elapsed]
grid_deployment.p1: Still creating... [20s elapsed]
grid_deployment.p1: Still creating... [30s elapsed]
grid_deployment.p1: Creation complete after 33s [id=3572]

Apply complete! Resources: 2 added, 0 changed, 0 destroyed.

Outputs:

public_ip = "178.250.167.69/26"
ygg_ip = "301:5393:bfab:9bfd:35f:140b:5736:7042"
➜ terraform-dany git:(main) ✗
```

However, logging in over SSH using the public IP does not connect. Switching to the planetary network IP does work, and from there I ran `ip a`:

```
root@proxy1:~# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether be:6a:b7:55:fb:a0 brd ff:ff:ff:ff:ff:ff
    inet 10.1.2.2/24 brd 10.1.2.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fd63:6a56:3957:2::2/64 scope global
       valid_lft forever preferred_lft forever
    inet6 fe80::bc6a:b7ff:fe55:fba0/64 scope link
       valid_lft forever preferred_lft forever
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 1e:40:27:8a:f7:56 brd ff:ff:ff:ff:ff:ff
    inet 178.250.167.69/26 brd 178.250.167.127 scope global eth1
       valid_lft forever preferred_lft forever
    inet6 fe80::1c40:27ff:fe8a:f756/64 scope link
       valid_lft forever preferred_lft forever
4: eth2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether e2:0d:ad:a9:66:e2 brd ff:ff:ff:ff:ff:ff
    inet6 301:5393:bfab:9bfd:35f:140b:5736:7042/64 scope global
       valid_lft forever preferred_lft forever
    inet6 fe80::e00d:adff:fea9:66e2/64 scope link
       valid_lft forever preferred_lft forever
5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:5e:8b:75:d1 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
```
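As a quick sanity check of the eth1 configuration, a short Python sketch (standard library `ipaddress` only) can derive the subnet details of the Terraform `public_ip` output. The constants are copied from the output above; the function name `describe_interface` is just an illustrative helper, not part of any grid tooling.

```python
import ipaddress

# Values copied from the Terraform output and the `ip a` listing for eth1.
TERRAFORM_PUBLIC_IP = "178.250.167.69/26"
IP_A_BROADCAST = "178.250.167.127"


def describe_interface(cidr: str) -> dict:
    """Derive network, broadcast and usable host range for an address in CIDR form."""
    iface = ipaddress.ip_interface(cidr)
    net = iface.network
    hosts = list(net.hosts())  # excludes the network and broadcast addresses
    return {
        "address": str(iface.ip),
        "network": str(net),
        "broadcast": str(net.broadcast_address),
        "first_host": str(hosts[0]),
        "last_host": str(hosts[-1]),
        "usable_hosts": len(hosts),
    }


if __name__ == "__main__":
    info = describe_interface(TERRAFORM_PUBLIC_IP)
    for key, value in info.items():
        print(f"{key:>12}: {value}")
    # The broadcast address reported by `ip a` should equal the computed one.
    print("broadcast matches ip a:", info["broadcast"] == IP_A_BROADCAST)
```

For 178.250.167.69/26 this yields network 178.250.167.64/26, broadcast 178.250.167.127 (the same `brd` value `ip a` shows on eth1) and usable hosts .65 through .126. The first usable host, 178.250.167.65, is also the default gateway in the VM's routing table, so the address and mask the VM received look internally consistent.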

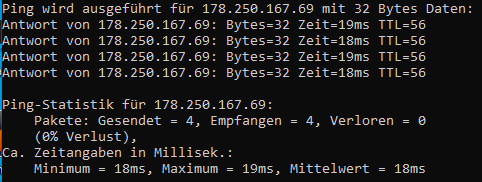

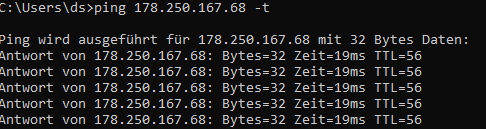

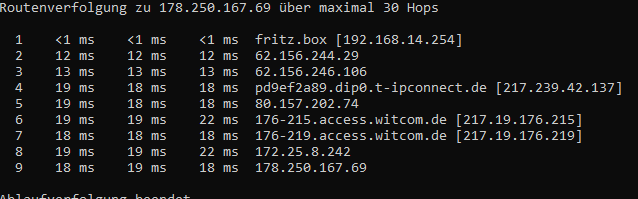

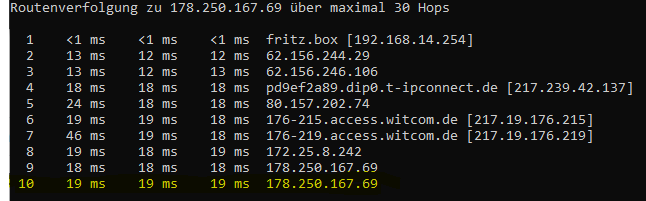

The IP address is assigned to an interface, but routing from the outside does not work yet. Again, from my laptop the ping gets no replies:

```
➜ terraform-dany git:(main) ✗ ping 178.250.167.69
PING 178.250.167.69 (178.250.167.69) 56(84) bytes of data.
```

And from within the VM, pinging 1.1.1.1 out of the public interface (again, no replies):

```
root@proxy1:~# ping -I eth1 1.1.1.1
PING 1.1.1.1 (1.1.1.1) from 178.250.167.69 eth1: 56(84) bytes of data.
```

Traffic does not reach the outside world. Is this a routing problem inside the VM, or in the upstream switch/router? The VM's routing table:

```
root@proxy1:~# ip r
default via 178.250.167.65 dev eth1
10.1.0.0/16 via 10.1.2.1 dev eth0
10.1.2.0/24 dev eth0 proto kernel scope link src 10.1.2.2
100.64.0.0/16 via 10.1.2.1 dev eth0
172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown
178.250.167.64/26 dev eth1 proto kernel scope link src 178.250.167.69
root@proxy1:~#
```
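To answer the VM-versus-upstream question from the table itself, here is a minimal longest-prefix-match sketch in Python. It is a simplification of what the kernel does (it ignores metrics, policy routing and multiple routing tables) and only parses the fields visible in this `ip r` output; `parse_ip_route` and `lookup` are hypothetical helper names.

```python
import ipaddress
from typing import List, NamedTuple, Optional

# The routing table exactly as printed by `ip r` inside the VM.
IP_R = """
default via 178.250.167.65 dev eth1
10.1.0.0/16 via 10.1.2.1 dev eth0
10.1.2.0/24 dev eth0 proto kernel scope link src 10.1.2.2
100.64.0.0/16 via 10.1.2.1 dev eth0
172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown
178.250.167.64/26 dev eth1 proto kernel scope link src 178.250.167.69
"""


class Route(NamedTuple):
    prefix: ipaddress.IPv4Network
    dev: str
    via: Optional[str]  # None means the destination is on-link (no gateway)


def parse_ip_route(text: str) -> List[Route]:
    """Parse prefix, optional 'via' gateway and 'dev' from each `ip r` line."""
    routes = []
    for line in text.strip().splitlines():
        fields = line.split()
        if not fields:
            continue
        prefix = "0.0.0.0/0" if fields[0] == "default" else fields[0]
        via = fields[fields.index("via") + 1] if "via" in fields else None
        dev = fields[fields.index("dev") + 1]
        routes.append(Route(ipaddress.ip_network(prefix), dev, via))
    return routes


def lookup(routes: List[Route], destination: str) -> Route:
    """Longest-prefix match: the most specific route containing destination wins."""
    addr = ipaddress.ip_address(destination)
    matches = [r for r in routes if addr in r.prefix]
    return max(matches, key=lambda r: r.prefix.prefixlen)


if __name__ == "__main__":
    table = parse_ip_route(IP_R)
    for dst in ("1.1.1.1", "178.250.167.65", "10.1.2.1"):
        r = lookup(table, dst)
        hop = f"via {r.via}" if r.via else "on-link"
        print(f"{dst:>15} -> {r.prefix} {hop} dev {r.dev}")
```

With this table, 1.1.1.1 falls through to the default route via 178.250.167.65 on eth1, and 178.250.167.65 itself matches the on-link 178.250.167.64/26 route on eth1. That is the expected result, which suggests the VM's routing table is not the culprit. `ip route get 1.1.1.1` asks the kernel for the same decision directly.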

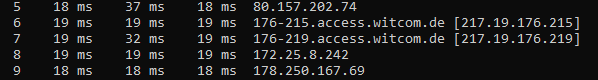

In conclusion:

- Planetary network ingress and egress work.

- Public IPv4 does not work. My best guess is a routing issue somewhere in the upstream switch/router. Pinging the default gateway from the VM:


```
root@proxy1:~# ping 178.250.167.65
PING 178.250.167.65 (178.250.167.65) 56(84) bytes of data.
From 178.250.167.69 icmp_seq=1 Destination Host Unreachable
From 178.250.167.69 icmp_seq=2 Destination Host Unreachable
From 178.250.167.69 icmp_seq=3 Destination Host Unreachable
From 178.250.167.69 icmp_seq=4 Destination Host Unreachable
From 178.250.167.69 icmp_seq=5 Destination Host Unreachable
From 178.250.167.69 icmp_seq=6 Destination Host Unreachable
From 178.250.167.69 icmp_seq=7 Destination Host Unreachable
From 178.250.167.69 icmp_seq=8 Destination Host Unreachable
From 178.250.167.69 icmp_seq=9 Destination Host Unreachable
```

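The gateway does not reply; the "Destination Host Unreachable" errors are reported by the VM's own address (178.250.167.69).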
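When Linux `ping` reports "Destination Host Unreachable" from the sender's own address for an on-link gateway, that usually means the kernel never got an ARP reply for the gateway's MAC address, i.e. nothing on the eth1 segment answered. The sketch below checks that assumption by reading the ARP table in the `/proc/net/arp` format (header row, then IP address, HW type, flags, HW address, mask, device); the helper name `gateway_mac` is hypothetical, and the `0x2` "completed" flag corresponds to `ATF_COM` from the kernel's ARP flags.

```python
from pathlib import Path
from typing import Optional

ATF_COM = 0x2  # "completed" entry flag (ATF_COM in linux/if_arp.h)


def gateway_mac(arp_table: str, gateway: str, device: str) -> Optional[str]:
    """Return the resolved MAC of gateway on device, or None if unresolved.

    arp_table is the text of /proc/net/arp: a header row, then one row per
    entry with IP address, HW type, flags, HW address, mask and device.
    """
    for line in arp_table.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) < 6:
            continue
        ip, _hw_type, flags, mac, _mask, dev = fields[:6]
        if ip == gateway and dev == device:
            # Incomplete entries (no ARP reply yet) lack the ATF_COM flag.
            return mac if int(flags, 16) & ATF_COM else None
    return None


if __name__ == "__main__":
    arp = Path("/proc/net/arp")
    if arp.exists():  # only present on Linux
        mac = gateway_mac(arp.read_text(), "178.250.167.65", "eth1")
        print("gateway MAC:", mac or "unresolved (no ARP reply)")
```

Run inside the VM right after the failed ping: if the gateway entry is missing or unresolved, the problem most likely sits on the provider's side of eth1 (switch, VLAN or gateway configuration) rather than in the VM. `ip neigh show dev eth1` shows the same neighbour state, typically as an INCOMPLETE or FAILED entry in that case.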
