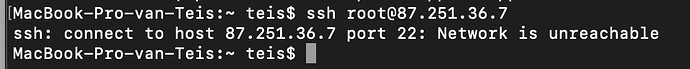

Hi, I'm not sure who to contact, but I have been deploying VMs on node 1655, farm ID 84 (Terminator), and the network configuration does not seem to be done properly. AFAICS I have provisioned an IPv4 address:

```json
{
  "version": 0,
  "contractId": 14356,
  "nodeId": 1655,
  "name": "VMc02ecdfc",
  "created": 1673433242,
  "status": "ok",
  "message": "",
  "flist": "https://hub.grid.tf/tf-official-vms/ubuntu-18.04-lts.flist",
  "publicIP": {
    "ip": "87.251.36.6/24",
    "ip6": "",
    "gateway": "87.251.36.1"
  },
  "planetary": "304:5069:f7aa:c456:ede2:6255:b0c8:8607",
  "interfaces": [
    {
      "network": "NW61a62ca3",
      "ip": "10.20.2.2"
    }
  ],
  "capacity": {
    "cpu": 4,
    "memory": 4096
  },
  "mounts": [
    {
      "name": "DISK84fd1857",
      "mountPoint": "/",
      "size": 53687091200,
      "state": "ok",
      "message": ""
    }
  ],
  "env": {
    "SSH_KEY": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDAOP0h6VImNcxnIBRMoMfbMfb0xwGHDlaPxZ+nu0CL8ATJekVDHDLMGEPdvACfHBe0sqIw/l6jqoEMR4Dzhjgm4bVEUBVEnG1FvkeNB59sT2DOxDCZuqJvjx2M1bJlH8AR/JQXxUQ+zvfTbavc4/zfCuJm4PYNUsmEt/IQmRwLznGOkoJbwYLhKCC3ykZd0EGpmCWgUUYn0ihaaYkyrliQi5Ny00x0s6jOIJg0CG2Xh5xcrkhOfCZMxZAB+/LGQpZ3tu+Cy5jRf8V/JZ8XQmtYM2GmBUZ1KGcMcsGzrtuudn13JeYLtWJBw6A7Q3Fb7dQSCMLC9UA0uMSZk67M6DFV john@RescuedMac"
  },
  "entrypoint": "/init.sh",
  "metadata": "{\"type\":\"vm\",\"name\":\"VMc02ecdfc\",\"projectName\":\"Fullvm\"}",
  "description": "",
  "corex": false
}
```

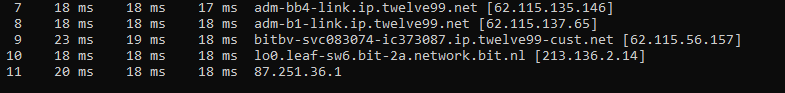

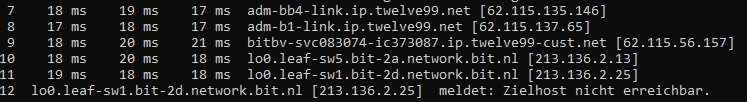
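One way to rule out an addressing mistake on the deployment side is to check that the gateway actually lies inside the prefix of the assigned public IP. The sketch below does that with Python's standard `ipaddress` module on the `publicIP` part of the JSON above; the function name `check_public_ip` is hypothetical and not part of any ThreeFold tooling.

```python
import ipaddress
import json

# Excerpt of the deployment JSON above (only the publicIP object is needed).
deployment_json = """
{
  "publicIP": {
    "ip": "87.251.36.6/24",
    "ip6": "",
    "gateway": "87.251.36.1"
  }
}
"""

def check_public_ip(deployment: dict) -> bool:
    """Return True if the gateway is inside the assigned subnet and is not the VM's own address."""
    public_ip = deployment["publicIP"]
    iface = ipaddress.ip_interface(public_ip["ip"])        # address plus prefix length
    gateway = ipaddress.ip_address(public_ip["gateway"])
    return gateway in iface.network and gateway != iface.ip

deployment = json.loads(deployment_json)
iface = ipaddress.ip_interface(deployment["publicIP"]["ip"])
print(iface.network)                # 87.251.36.0/24
print(check_public_ip(deployment))  # True
```

If this returns `True`, the address/gateway pair handed to the VM is at least self-consistent, which shifts suspicion toward the link between the node and the gateway rather than the deployment parameters.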

But pinging it points to a routing issue inside the DC network, or in the switch/router connecting the 3nodes:

```
➜ ~ ping 87.251.36.6
PING 87.251.36.6 (87.251.36.6) 56(84) bytes of data.
From 213.136.2.25 icmp_seq=1 Destination Host Unreachable
From 213.136.2.25 icmp_seq=2 Destination Host Unreachable
From 213.136.2.25 icmp_seq=3 Destination Host Unreachable
From 213.136.2.25 icmp_seq=4 Destination Host Unreachable
```


Or, by hostname:

```
ping meet.mytrunk.org
PING meet.mytrunk.org (87.251.36.6) 56(84) bytes of data.
From lo0.leaf-sw1.bit-2d.network.bit.nl (213.136.2.25) icmp_seq=1 Destination Host Unreachable
From lo0.leaf-sw1.bit-2d.network.bit.nl (213.136.2.25) icmp_seq=2 Destination Host Unreachable
From lo0.leaf-sw1.bit-2d.network.bit.nl (213.136.2.25) icmp_seq=3 Destination Host Unreachable
```
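The key detail in both pings is *who* sends the "Destination Host Unreachable": every error comes from 213.136.2.25 (`lo0.leaf-sw1.bit-2d.network.bit.nl`), a switch in the DC, not from the VM. A small parser like the one below (a hypothetical helper, not an existing tool) makes that explicit by counting the unreachable replies per reporting address; it handles both the bare-IP and the `name (IP)` forms that `ping` prints.

```python
import re

# Reply lines copied from the two pings above.
ping_by_ip = """\
PING 87.251.36.6 (87.251.36.6) 56(84) bytes of data.
From 213.136.2.25 icmp_seq=1 Destination Host Unreachable
From 213.136.2.25 icmp_seq=2 Destination Host Unreachable
From 213.136.2.25 icmp_seq=3 Destination Host Unreachable
From 213.136.2.25 icmp_seq=4 Destination Host Unreachable
"""

ping_by_name = """\
PING meet.mytrunk.org (87.251.36.6) 56(84) bytes of data.
From lo0.leaf-sw1.bit-2d.network.bit.nl (213.136.2.25) icmp_seq=1 Destination Host Unreachable
From lo0.leaf-sw1.bit-2d.network.bit.nl (213.136.2.25) icmp_seq=2 Destination Host Unreachable
From lo0.leaf-sw1.bit-2d.network.bit.nl (213.136.2.25) icmp_seq=3 Destination Host Unreachable
"""

# Matches "From <addr> icmp_seq=N ..." as well as "From <name> (<addr>) icmp_seq=N ...".
UNREACH_RE = re.compile(
    r"^From (?:\S+ \()?(?P<addr>[\d.]+)\)? icmp_seq=(?P<seq>\d+) Destination Host Unreachable$"
)

def unreachable_reporters(output: str) -> dict[str, int]:
    """Count 'Destination Host Unreachable' replies per reporting IPv4 address."""
    counts: dict[str, int] = {}
    for line in output.splitlines():
        m = UNREACH_RE.match(line.strip())
        if m:
            counts[m["addr"]] = counts.get(m["addr"], 0) + 1
    return counts

print(unreachable_reporters(ping_by_ip))    # {'213.136.2.25': 4}
print(unreachable_reporters(ping_by_name))  # {'213.136.2.25': 3}
```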

From within the VM (you can connect to it over the Planetary Network):

```
root@meet:~# ping 1.1.1.1
PING 1.1.1.1 (1.1.1.1) 56(84) bytes of data.
From 87.251.36.6 icmp_seq=1 Destination Host Unreachable
```
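Inside the VM the picture is mirrored: the error now comes from 87.251.36.6, the VM's own address. On Linux, an unreachable reported by your own address usually means the local kernel could not resolve the next hop (here presumably the gateway 87.251.36.1) via ARP. The sketch below classifies a ping line as `local` or `remote` on that basis; `classify_unreachable` and `VM_ADDRESS` are hypothetical names, and the ARP interpretation is an assumption rather than something the output proves.

```python
import ipaddress
import re

# Public IP assigned to the VM in the deployment JSON above.
VM_ADDRESS = ipaddress.ip_address("87.251.36.6")

UNREACH_RE = re.compile(
    r"From (?:\S+ \()?([\d.]+)\)? icmp_seq=\d+ Destination Host Unreachable"
)

def classify_unreachable(line: str, local_addr: ipaddress.IPv4Address) -> str:
    """Say whether an unreachable error was generated locally or by a remote router."""
    m = UNREACH_RE.match(line.strip())
    if not m:
        return "no-unreachable"
    reporter = ipaddress.ip_address(m.group(1))
    if reporter == local_addr:
        # Reported by the VM itself: assumed to be a failed neighbour (ARP)
        # lookup for the next hop on the VM's own interface.
        return "local"
    # Reported by some router on the path (e.g. the DC leaf switch).
    return "remote"

print(classify_unreachable("From 87.251.36.6 icmp_seq=1 Destination Host Unreachable", VM_ADDRESS))   # local
print(classify_unreachable("From 213.136.2.25 icmp_seq=1 Destination Host Unreachable", VM_ADDRESS))  # remote
```

Under that assumption, "local" from inside the VM combined with "remote" from the leaf switch would fit neighbour resolution failing in both directions on the segment between the VM and its gateway.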

A tracepath from the VM also fails at the first hop:

```
root@meet:~# tracepath 1.1.1.1
1?: [LOCALHOST] pmtu 1500
1: ??? 1403.418ms !H
Resume: pmtu 1500
root@meet:~#
```