Greetings,

I managed to deploy a qsfs on the testnet with terraform from the examples on github: https://github.com/threefoldtech/terraform-provider-grid/blob/development/examples/resources/qsfs/main.tf

Now I want to access it to upload some files and perform a few tests.

How do I access the server? I’m used to just ssh-ing into a vm.

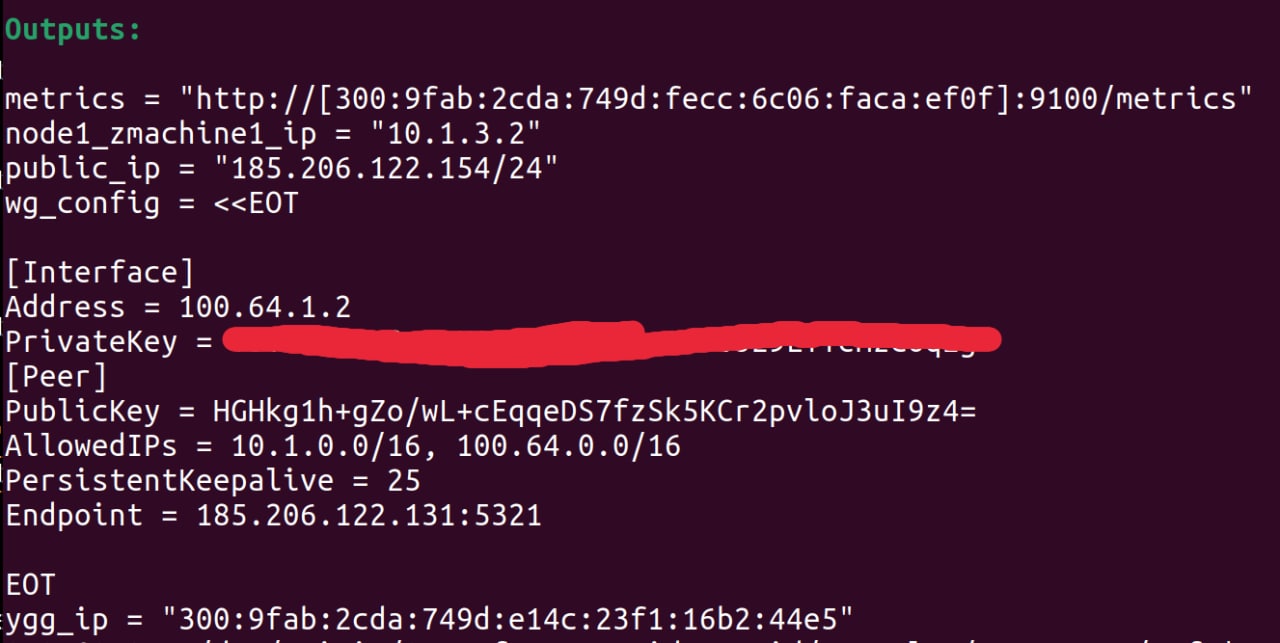

For clarity, here is my output:

And my terraform config:

terraform {

required_providers {

grid = {

source = "threefoldtech/grid"

}

}

}

provider "grid" {

}

locals {

metas = ["meta1", "meta2", "meta3", "meta4"]

datas = ["data1", "data2", "data3", "data4"]

}

resource "grid_network" "net1" {

nodes = [7]

ip_range = "10.1.0.0/16"

name = "network"

description = "newer network"

add_wg_access = true

}

resource "grid_deployment" "d1" {

node = 7

dynamic "zdbs" {

for_each = local.metas

content {

name = zdbs.value

description = "description"

password = "password"

size = 10

mode = "user"

}

}

dynamic "zdbs" {

for_each = local.datas

content {

name = zdbs.value

description = "description"

password = "password"

size = 10

mode = "seq"

}

}

}

resource "grid_deployment" "qsfs" {

node = 7

network_name = grid_network.net1.name

ip_range = lookup(grid_network.net1.nodes_ip_range, 7, "")

qsfs {

name = "qsfs"

description = "description6"

cache = 10240 # 10 GB

minimal_shards = 2

expected_shards = 4

redundant_groups = 0

redundant_nodes = 0

max_zdb_data_dir_size = 512 # 512 MB

encryption_algorithm = "AES"

encryption_key = "4d778ba3216e4da4231540c92a55f06157cabba802f9b68fb0f78375d2e825af"

compression_algorithm = "snappy"

metadata {

type = "zdb"

prefix = "hamada"

encryption_algorithm = "AES"

encryption_key = "4d778ba3216e4da4231540c92a55f06157cabba802f9b68fb0f78375d2e825af"

dynamic "backends" {

for_each = [for zdb in grid_deployment.d1.zdbs : zdb if zdb.mode != "seq"]

content {

address = format("[%s]:%d", backends.value.ips[1], backends.value.port)

namespace = backends.value.namespace

password = backends.value.password

}

}

}

groups {

dynamic "backends" {

for_each = [for zdb in grid_deployment.d1.zdbs : zdb if zdb.mode == "seq"]

content {

address = format("[%s]:%d", backends.value.ips[1], backends.value.port)

namespace = backends.value.namespace

password = backends.value.password

}

}

}

}

vms {

name = "vm"

flist = "https://hub.grid.tf/tf-official-apps/base:latest.flist"

cpu = 2

memory = 1024

entrypoint = "/sbin/zinit init"

publicip = true

planetary = true

env_vars = {

SSH_KEY = "ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIIwVBFL95gmLMcck2XVlZIKNDDOEWq09q8xFtsiMb7JU toto@adastraindustries.com"

}

mounts {

disk_name = "qsfs"

mount_point = "/qsfs"

}

}

}

output "metrics" {

value = grid_deployment.qsfs.qsfs[0].metrics_endpoint

}

output "ygg_ip" {

value = grid_deployment.qsfs.vms[0].ygg_ip

}

output "public_ip" {

value = grid_deployment.qsfs.vms[0].computedip

}

output "node1_zmachine1_ip" {

value = grid_deployment.qsfs.vms[0].ip

}

output "wg_config" {

value = grid_network.net1.access_wg_config

}