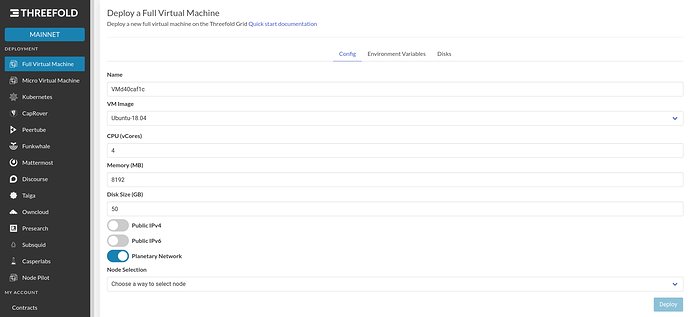

Full virtual machines (VMs) have been available on the Grid for a while, but with the 3.7 release, it’s now very simple to deploy them on mainnet through the playground.

So, what’s a full VM, and why is this important?

Full and Micro VMs

Let’s start by noting the other kind of VM available on the Grid: the “micro” kind. These were the default VM type in the playground until the recent update, and they sometimes confused users who expected them to behave like a VPS from other providers. That is, in essence, what full VMs provide: a familiar offering that is feature-compatible with the VMs one might run locally or in a cloud environment.

Micro VMs are essentially container images, as used in Docker or Kubernetes, that have been promoted to run as VMs. Container images don’t include their own kernel, because containers usually share the host’s kernel. Zero OS provides each micro VM with its own basic kernel. This means micro VMs are more isolated than containers, and therefore more secure, especially when multiple users run workloads on the same 3Node. At the same time, they are still rather lightweight compared to a full VM and don’t have all the same capabilities.
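If you want to see this difference from inside a guest, the running kernel and the process at PID 1 are good clues. Here’s a minimal Python sketch; it’s a generic Linux heuristic, not a Grid or Zero OS tool, and the function names `read_pid1_name` and `describe_guest` are hypothetical. In a container, the reported kernel is the host’s; in a micro or full VM, it’s the guest’s own.

```python
import platform
from pathlib import Path
from typing import Optional


def read_pid1_name(proc_root: str = "/proc") -> Optional[str]:
    """Return the command name of PID 1, or None if it can't be read."""
    try:
        return (Path(proc_root) / "1" / "comm").read_text().strip()
    except OSError:
        return None


def describe_guest(proc_root: str = "/proc") -> dict:
    """Summarize the kernel and init process visible from inside a guest."""
    return {
        # Kernel release string. In a container this is the host's kernel;
        # in a micro or full VM it is the guest's own kernel.
        "kernel": platform.release(),
        # Name of PID 1: typically "systemd" on common full VM images, often
        # a minimal init or the application itself in micro VMs and containers.
        "pid1": read_pid1_name(proc_root),
    }


if __name__ == "__main__":
    for key, value in describe_guest().items():
        print(f"{key}: {value}")
```

Running it in a container and in a VM on the same machine should show different kernel strings for the VM but the same one for the container.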

What can you do with a full VM?

Full VMs are capable of anything you can do with a Linux server. They should be compatible with any guides and tutorials written for the same version of the distribution they are running.

What exactly can full VMs do that micro VMs can’t? Here are a couple of examples:

- Run and manage services using `systemd` (it’s technically possible for a micro VM to include `systemd`, or for a full VM to omit it, but that’s uncommon and not the case in our official images)
- Use software that requires certain kernel modules (running WireGuard inside a VM is one example that didn’t work for me in a micro VM)
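To check whether a given machine offers these two capabilities, here’s a minimal Python sketch. It assumes a standard Linux filesystem layout: `/run/systemd/system` exists only when systemd is the init system (the check documented for `sd_booted(3)`), and kernel modules show up under `/sys/module` or `/lib/modules/<kernel release>`. The helper names are hypothetical, and the module check is a heuristic, not a guarantee that the module will actually load.

```python
import platform
from pathlib import Path
from typing import Optional


def systemd_is_running(root: str = "/") -> bool:
    """True if systemd is the init system (same check as sd_booted(3))."""
    return (Path(root) / "run" / "systemd" / "system").is_dir()


def kernel_module_available(name: str, root: str = "/",
                            release: Optional[str] = None) -> bool:
    """Heuristic: is kernel module `name` loaded, built in, or installed?"""
    base = Path(root)
    # Loaded modules (and many built-in ones) get a directory in /sys/module.
    if (base / "sys" / "module" / name).is_dir():
        return True

    mod_dir = base / "lib" / "modules" / (release or platform.release())
    if not mod_dir.is_dir():
        return False

    # Built-in modules are listed by path, e.g. ".../wireguard/wireguard.ko".
    try:
        for line in (mod_dir / "modules.builtin").read_text().splitlines():
            if Path(line.strip()).name == f"{name}.ko":
                return True
    except OSError:
        pass  # file missing or unreadable; fall back to searching for .ko files

    # Loadable modules installed for this kernel, possibly compressed (.ko.xz, .ko.zst).
    return any(mod_dir.rglob(f"{name}.ko*"))


if __name__ == "__main__":
    print("systemd running:", systemd_is_running())
    print("wireguard available:", kernel_module_available("wireguard"))
```

Module names are matched literally, so a module whose file name uses `-` where sysfs uses `_` may need both spellings checked.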

We currently offer several versions of Ubuntu as default full VM options, and you can also enter any full VM flist link under the “other” option. See the official VMs section of the flist hub for a few more options. For an example of what’s possible with full VMs, see the Cockpit VM images from @ParkerS, which you can deploy yourself.

If you’re interested in adding new or customized VM images to the Grid, I recommend this post by @linkmark, as well as the Zos documentation on Zmachine.