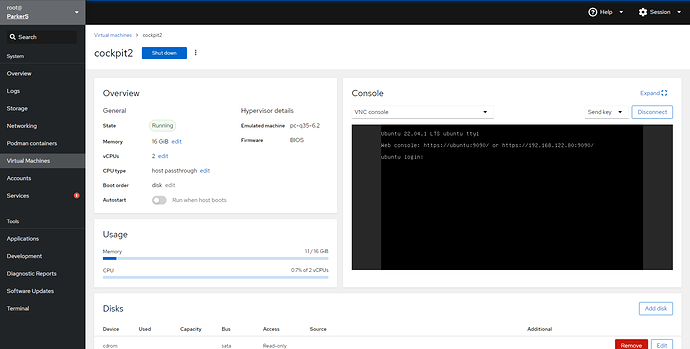

9/27 update

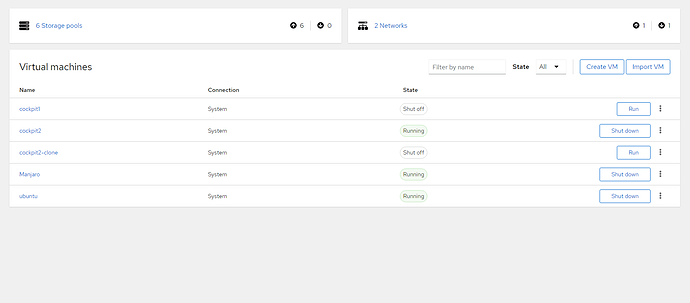

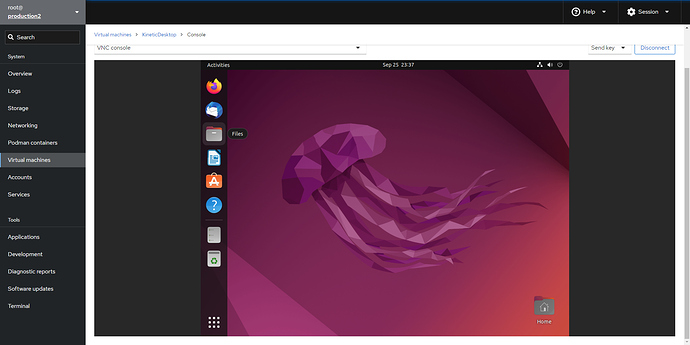

I wanted to get a breakdown out there of how I got this up and running. This is an amazing tool to combine with your Ubuntu 22.04 full VM, and it does work with planetary addresses. Unfortunately, I can't get the 22.04 VM to deploy with only a planetary address.

From the SSH console of your freshly booted Ubuntu 22.04 VM, you will need to run:

apt update

apt install network-manager

cd /etc/netplan

ls -l

Edit the netplan file with nano: nano followed by the netplan file name

Delete “version: 2” from the bottom

change

network:

ethernets:

to

network:

version: 2

renderer: NetworkManager

ethernets:

Ctrl+X to exit, then confirm the write with “y”
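For reference, here is a rough sketch of how the top of the netplan file should look after the edit. It only writes to a scratch file so nothing in /etc/netplan is touched. The comment under `ethernets:` stands in for whatever interface entries your VM already has; I am not assuming any interface names or addresses here.

```shell
# Sketch only: writes an example to a scratch file, not to /etc/netplan.
example="$(mktemp)"
cat > "$example" <<'EOF'
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    # your existing interface entries stay here, unchanged
EOF

# Show the renderer line so the layout can be compared with the real file.
grep -n 'renderer: NetworkManager' "$example"
```

The indentation matters in YAML, so `version`, `renderer` and `ethernets` all sit one level under `network:`.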

systemctl disable systemd-networkd && systemctl enable NetworkManager

netplan apply

apt upgrade

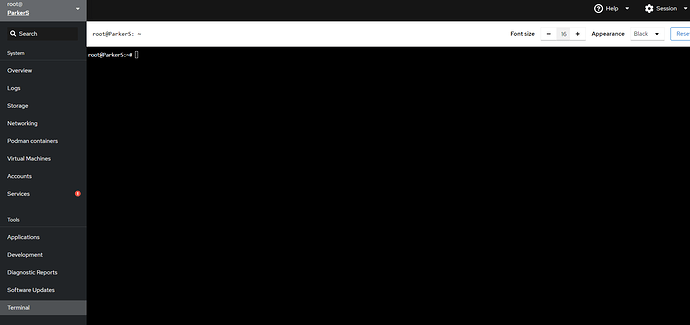

apt install cockpit

apt install cockpit-machines

apt install cockpit-podman

apt install cockpit-sosreport

apt install cockpit-pcp

apt install cockpit-tests
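The installs above can also be done in one go. This is just a sketch: it assumes the package names as I believe they appear in the Ubuntu 22.04 repositories, and it only prints the command so you can check it before running it as root.

```shell
# Assumption: these are the package names in the Ubuntu 22.04 repositories.
packages="cockpit cockpit-machines cockpit-podman cockpit-sosreport cockpit-pcp cockpit-tests"

# Print the command first; run it yourself as root once it looks right.
echo "apt install -y $packages"
```

If apt reports that a package cannot be found, `apt search cockpit` will list the names available on your VM.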

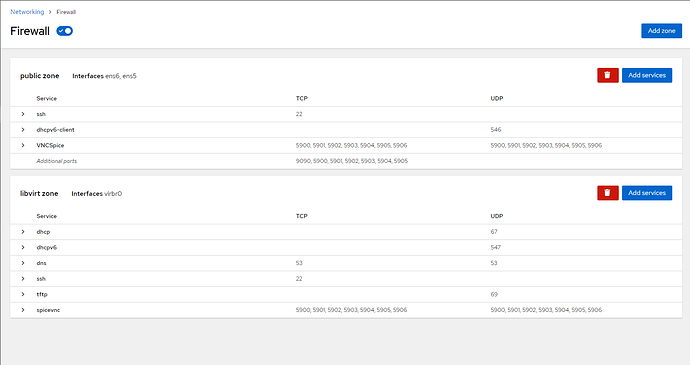

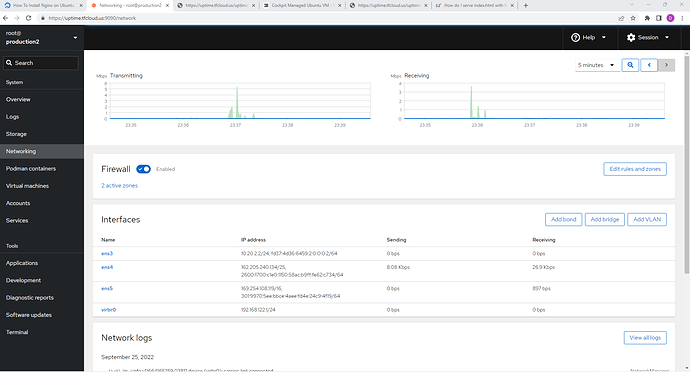

apt install firewalld

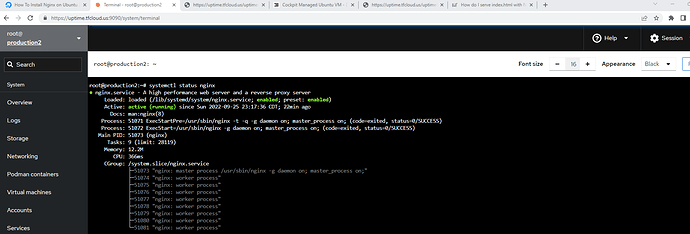

systemctl start cockpit

passwd root

The GUI is now accessible and fully functional at both the public and the planetary IP.

Login requires a password, and the login must match the SSH key to get sudo access.

If you start firewalld, it will cut off access to the web console until you have added port 9090 to the correct zone and added cockpit as an allowed service.
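A minimal sketch of the firewalld step, assuming `public` is the active zone on your VM (check with `firewall-cmd --get-active-zones`, yours may differ). The `run` helper and the `DRY_RUN` variable are my own additions for illustration: by default the commands are only printed, and they are executed only when you set `DRY_RUN=0` and run as root.

```shell
# Assumption: "public" is the active zone; verify with: firewall-cmd --get-active-zones
zone="public"

# Hypothetical helper: prints the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Allow the cockpit service and port 9090 permanently, then reload the rules.
run firewall-cmd --permanent --zone="$zone" --add-service=cockpit
run firewall-cmd --permanent --zone="$zone" --add-port=9090/tcp
run firewall-cmd --reload
```

After a real run, `firewall-cmd --zone=public --list-all` should show cockpit under services and 9090/tcp under ports.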

Video setup tutorial to follow.

@weynandkuijpers you have a login to my running example in your PMs. I'm hoping we can get this into an flist; I have tried and failed miserably.